Artificial intelligence is being applied to a growing number of tasks, even though its limits are still being defined. One concern, especially with ChatGPT-style conversational AI, is how much these tools should tell and how much they should keep quiet, and that red line seems to be clearer than we think.

There is a saying that goes “I am worth more for what I keep quiet than for what I say,” and that is precisely the new added value being sought for AI: not revealing secrets.

How to prevent an AI from sharing secrets

Take GitHub, for example, which has updated the AI model behind Copilot, a programming assistant that generates source code and function recommendations in real time in Visual Studio, and claims it is now more secure and powerful.

The new AI model, which will be released to users this week, delivers better-quality suggestions in less time, which should raise the acceptance rate and make software developers more efficient. With this update, Copilot also introduces a new paradigm called “Fill-In-the-Middle”, which uses a library of known code suffixes (the code that follows the cursor) and leaves a gap for the AI tool to fill, making its suggestions more relevant and consistent with the rest of the project code.
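To make the idea concrete, here is a minimal sketch of how a fill-in-the-middle prompt could be assembled. The marker strings and the `build_fim_prompt` function are hypothetical names chosen for illustration; the format Copilot actually uses is not described in the announcement.

```python
# Hypothetical sentinel markers; real models define their own special tokens.
PREFIX_MARK = "<PRE>"
SUFFIX_MARK = "<SUF>"
MIDDLE_MARK = "<MID>"


def build_fim_prompt(source: str, cursor: int) -> str:
    """Split the file at the cursor so the model is asked to generate the middle."""
    prefix = source[:cursor]   # code before the cursor
    suffix = source[cursor:]   # code after the cursor (the "known suffix")
    # The model sees both sides of the gap and continues after MIDDLE_MARK.
    return f"{PREFIX_MARK}{prefix}{SUFFIX_MARK}{suffix}{MIDDLE_MARK}"


# Example: the cursor sits right after "return " inside the function body.
source = "def area(r):\n    return \n\nprint(area(2))\n"
cursor = source.index("return ") + len("return ")
prompt = build_fim_prompt(source, cursor)
```

Because the suffix is part of the prompt, the model can see how `area` is called further down the file before it proposes what to return, which is the kind of context a prefix-only prompt would miss.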

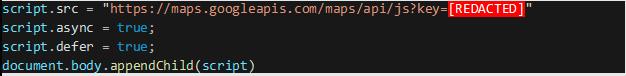

One of the most notable enhancements in this Copilot update is a new vulnerability filtering system that will help identify and block insecure suggestions such as hardcoded credentials, path injections, and SQL injections. GitHub says that Copilot can generate secrets such as keys, credentials, and passwords that resemble those seen in the training data. However, these cannot be used because they are completely fictional, and they will be blocked by the new filtering system.
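As a rough illustration of what blocking an insecure suggestion means, the sketch below flags two of the patterns mentioned above with plain regular expressions. It is a deliberately simple stand-in, not GitHub's filter: the patterns, `find_issues`, and `filter_suggestions` are all hypothetical, and path injection is left out for brevity.

```python
import re

# Hypothetical patterns, chosen for illustration only.
# A variable whose name hints at a secret, assigned a quoted literal.
SECRET_ASSIGNMENT = re.compile(
    r"\w*(password|passwd|secret|api_key|token)\w*\s*=\s*['\"][^'\"]+['\"]",
    re.IGNORECASE,
)
# A query passed to execute() that is an f-string or is built with + or %.
SQL_STRING_BUILDING = re.compile(
    r"execute\(\s*f['\"]|execute\([^)]*['\"]\s*[+%]\s*\w",
    re.IGNORECASE,
)


def find_issues(suggestion: str) -> list[str]:
    """Return a label for each insecure pattern found in a code suggestion."""
    issues = []
    if SECRET_ASSIGNMENT.search(suggestion):
        issues.append("hardcoded credential")
    if SQL_STRING_BUILDING.search(suggestion):
        issues.append("possible SQL injection")
    return issues


def filter_suggestions(suggestions: list[str]) -> list[str]:
    """Keep only the suggestions in which no insecure pattern was found."""
    return [s for s in suggestions if not find_issues(s)]
```

With these patterns, a suggestion such as `db_password = "hunter2"` would be dropped, while `password = os.environ["DB_PASSWORD"]` or a parameterized query would pass through. Regular expressions like these miss many cases and raise false alarms, which is one reason a production filter would need something more sophisticated.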

Strong criticism for revealing secrets

The appearance of these secrets in Copilot’s code suggestions has drawn strong criticism from the software development community, with many accusing Microsoft of using large, publicly available data sets to train its AI models without regard for security, including data sets that contain secrets by mistake.

By blocking unsafe suggestions in the real-time editor, GitHub could also provide some resistance against dataset poisoning attacks that aim to covertly train AI assistants to make suggestions that contain malicious payloads.

At this time, Copilot’s large language models (LLMs) are still being trained to distinguish between vulnerable and non-vulnerable code patterns, so the AI model’s performance on that front is expected to improve gradually in the near future.