One of the technologies we see repeated most often in monitor specifications is HDR. However, for some time now we have not seen a real transition from what we call SDR to HDR in media such as video games, not to mention that we haven’t seen a single GPU designed for HDR.

If you have ever wondered why we have not yet seen the definitive leap to HDR screens, leaving SDR behind, keep reading to discover the reason.

What is HDR and what differentiates it from SDR?

HDR stands for high dynamic range. It is based on increasing the number of bits per RGB component from 8 bits to 10 or even 12 bits. However, HDR is not about increasing the chrominance, and therefore the number of colors, but about luminance: it allows a wider range of brightness and contrast for each color and, as a result, a better representation of each one.

However, while 24-bit color monitors quickly replaced those with lower color depth, the same has not happened with HDR: we can still find a large number of monitors that do not support it. So the transition has never been fully completed.

One of the reasons is that HDR is hard to promote to someone whose screen cannot display that range. In the age of internet marketing, it is difficult to show off something that depends on people's visual perception when they cannot actually see it.

Chrominance and Luminance

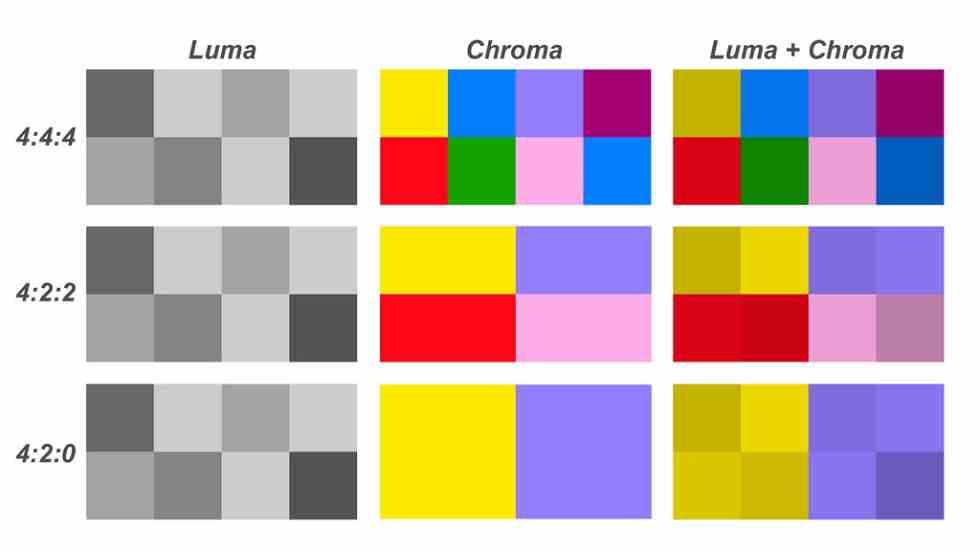

Each pixel shown on the screen is first stored in an image buffer that holds the values of its three RGB components: red, green and blue. The peculiarity of this way of storing information is that it holds not only the chrominance but also the luminance, that is, how bright that color is.
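To illustrate that an RGB pixel already carries its brightness, the sketch below derives luma (the gamma-encoded approximation of luminance) from the three components. It assumes the ITU-R BT.709 weights; the article does not name a standard, and the function name `luma` is hypothetical.

```python
# ITU-R BT.709 luma weights (assumed standard); green dominates perceived brightness.
BT709_WEIGHTS = (0.2126, 0.7152, 0.0722)


def luma(r: float, g: float, b: float) -> float:
    """Return the weighted luma of a normalized (0.0 to 1.0) R'G'B' pixel.

    Brightness is not stored in a separate field: it is derived from the
    same three components the image buffer already holds.
    """
    wr, wg, wb = BT709_WEIGHTS
    return wr * r + wg * g + wb * b


if __name__ == "__main__":
    # Halving every component keeps the hue but halves the luma.
    print(luma(1.0, 0.5, 0.0))   # bright orange
    print(luma(0.5, 0.25, 0.0))  # darker orange
```

Because the weights add up to 1.0, pure white gives a luma of 1.0 and black gives 0.0.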

On old black-and-white televisions, images were represented only by luminance values, and when color arrived, luminance and chrominance were carried as separate signals. In computing, however, even though cathode ray tube screens were used for almost three decades, the internal representation in the image buffer always stored both together in the same RGB values.

For a long time, the maximum color depth was 24 bits (8 bits per component), which gives 16.7 million different combinations. Screens of this type are called SDR screens, to distinguish them from HDR screens, which support more information per pixel: 10 or even 12 bits per component.
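The arithmetic behind these figures is easy to check. The sketch below (the helper name `color_counts` is hypothetical) computes the levels per component and the total number of combinations for 8, 10 and 12 bits per component, assuming three components and no alpha channel.

```python
def color_counts(bits_per_component: int, components: int = 3) -> tuple[int, int]:
    """Return (levels per component, total distinct combinations) for a bit depth."""
    levels = 2 ** bits_per_component
    return levels, levels ** components


if __name__ == "__main__":
    for bits in (8, 10, 12):
        levels, total = color_counts(bits)
        print(f"{bits:>2}-bit: {levels:>5} levels per component, "
              f"{total:,} combinations, {bits * 3} bits per pixel")
```

With 8 bits per component this gives 256 levels and 16,777,216 combinations (the 16.7 million above); 10 bits gives 1,024 levels and about 1.07 billion combinations, and 12 bits gives 4,096 levels and about 68.7 billion.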

HDR and real-time graphics rendering

HDR can be represented in two different ways:

- The first one is to add more bits per component to all the pixels in the image buffer.

- The second is to add a new component.
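The first option can be pictured as widening every pixel word. The sketch below packs three components into one integer under a hypothetical layout (red in the highest bits, blue in the lowest); `pack_rgb` and `unpack_rgb` are illustrative names, not a real graphics API. It only covers the first option, since the article does not specify what the added component would contain.

```python
def pack_rgb(r: int, g: int, b: int, bits: int) -> int:
    """Pack three unsigned components of `bits` bits each into one integer.

    Hypothetical layout: red in the highest bits, blue in the lowest.
    """
    max_value = (1 << bits) - 1
    for c in (r, g, b):
        if not 0 <= c <= max_value:
            raise ValueError(f"component {c} does not fit in {bits} bits")
    return (r << (2 * bits)) | (g << bits) | b


def unpack_rgb(pixel: int, bits: int) -> tuple[int, int, int]:
    """Inverse of pack_rgb for the same hypothetical layout."""
    mask = (1 << bits) - 1
    return (pixel >> (2 * bits)) & mask, (pixel >> bits) & mask, pixel & mask


if __name__ == "__main__":
    # The same "pure white" needs 24 bits at 8 bits per component
    # and 30 bits at 10 bits per component.
    sdr_white = pack_rgb(255, 255, 255, bits=8)
    hdr_white = pack_rgb(1023, 1023, 1023, bits=10)
    print(sdr_white.bit_length(), hdr_white.bit_length())
```

Every unit that stores, moves or processes such a word has to handle the wider value, which is the cost discussed next.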

Both solutions make sense for still images and video files, but things get complicated with GPUs that render 3D scenes in real time, because every GPU unit that manipulates pixel data, as well as every unit that transmits that data, has to increase its precision.

This requires GPUs with a higher transistor count, which means either larger chips for the same performance or less performance if the chip size is kept. The solution manufacturers have chosen? The second one, and as a consequence games perform worse in HDR mode than when they are rendered in SDR.

GPUs have never been designed for HDR but for SDR

If we increase the number of bits per pixel, moving that data through the rendering pipeline requires more bandwidth, which can translate into a drop in performance if the data does not move fast enough between the memory that stores it and the units that process it.
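A rough estimate shows how quickly this adds up. The sketch below computes the size of one uncompressed frame and the bandwidth needed just to write it once per displayed frame. The resolution (3840x2160), frame rate (60 fps) and the `passes` parameter are illustrative assumptions, and the model ignores compression, caching and the many intermediate buffers a real renderer reads and writes.

```python
def framebuffer_bytes(width: int, height: int, bits_per_pixel: int) -> int:
    """Size in bytes of one uncompressed frame."""
    return width * height * bits_per_pixel // 8


def write_bandwidth_gb_s(width: int, height: int, bits_per_pixel: int,
                         fps: int, passes: int = 1) -> float:
    """GB/s needed to write `passes` full frames per displayed frame.

    Simplifying assumption: no compression, no overdraw, no reads.
    """
    return framebuffer_bytes(width, height, bits_per_pixel) * fps * passes / 1e9


if __name__ == "__main__":
    for bits in (8, 10, 12):
        bpp = 3 * bits  # three components, no alpha
        mb = framebuffer_bytes(3840, 2160, bpp) / 1e6
        gbs = write_bandwidth_gb_s(3840, 2160, bpp, fps=60)
        print(f"{bits}-bit components: {mb:.1f} MB per frame, {gbs:.2f} GB/s at 60 fps")
```

Moving from 8 to 10 and 12 bits per component multiplies this traffic by 1.25 and 1.5 respectively, and in a real renderer that factor applies to every pass that touches pixel data.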

But moving information around inside a processor is expensive, especially in terms of energy consumption, and this is one of the reasons why none of the GPUs of recent years has been designed to work natively with pixel values beyond 8 bits per component. After all, for a long time the main priority when designing new processors has been performance per watt.

If GPUs had been designed for HDR, as happened with other technologies, HDR would by now be fully standardized, all PC hardware and software would be designed for it, and it would be used by everyone.