It may seem like an irrelevant question, especially these days. But the truth is that both Intel and AMD are going to change the caches in their new architectures, particularly their hierarchies, their sizes and the specific functions governed by their management algorithms. So before all of that arrives, it is worth understanding some basic concepts that go beyond “what is it” and “how does it work”. Let’s look at the remaining whys of something as vital as a CPU’s cache memory.

Some questions keep coming back over the years, no matter how much technology advances. One of them is precisely why a CPU needs cache memory at all if the system already has RAM, which ultimately supplies the processor with information and acts as its point of exchange with the rest of the system.

Why have such a specific type of memory, and why does its capacity keep growing?

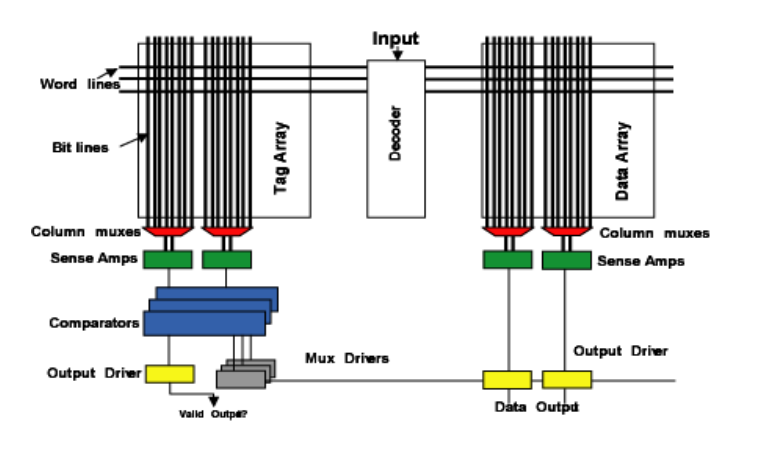

The simplest way to define a CPU’s cache memory is as an intermediary. Its function is to hold as much of the data the processor is going to need as possible, so that it can supply it to the CPU and its registers. It is in this process that its real purpose becomes clear.

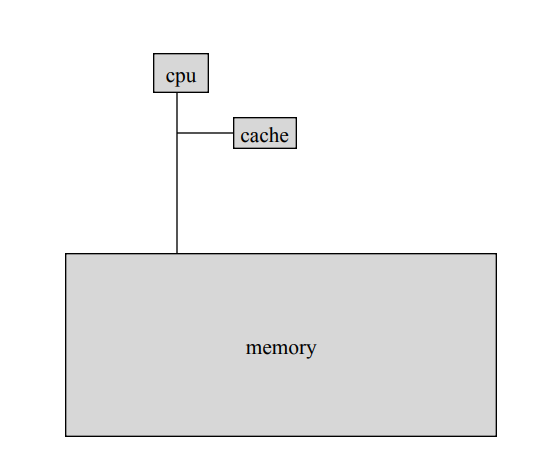

The cache memory of a CPU exists for a very simple and basic reason: to reduce the average time the processor needs to get its data, so that it does not have to access system RAM as often. In other words, both Intel and AMD include this type of memory to avoid accessing RAM as much as possible.
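The idea of reducing the average access time can be made concrete with the textbook average memory access time (AMAT) formula: hit time + miss rate × miss penalty. The sketch below is a minimal single-level model; the 1 ns hit time, 5% miss rate and 80 ns RAM latency are hypothetical figures chosen only for illustration, not measurements of any Intel or AMD processor.

```python
def amat(hit_time_ns: float, miss_rate: float, miss_penalty_ns: float) -> float:
    """Average memory access time for one cache level in front of RAM."""
    # Every access pays the hit time; only the misses also pay the trip to RAM.
    return hit_time_ns + miss_rate * miss_penalty_ns


RAM_LATENCY_NS = 80.0  # assumed cost of one access to RAM

print(f"without cache: {RAM_LATENCY_NS:.1f} ns")
print(f"with cache:    {amat(1.0, 0.05, RAM_LATENCY_NS):.1f} ns")
```

With these assumed numbers, a 95% hit rate brings the average from 80 ns down to 5 ns, even though every miss still pays the full trip to RAM.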

Within a processor’s memory hierarchy, the cache sits in second place. Although it has its own internal hierarchy of levels, only the registers sit above it, right next to the ALUs and other execution units that each CPU model contains.

Advantages of including cache memory in a CPU

The main advantage, as we have said, is latency: information is continuously fetched from RAM in advance, so the processor is always fed with data and can maximize its performance.
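Fetching data in advance is the job of a prefetcher. As a hypothetical and heavily simplified illustration (an unbounded cache, 64-byte lines and a naive next-line policy, none of which describe a specific CPU), the sketch below counts how many accesses in a sequential scan would have to wait for RAM with and without prefetching.

```python
LINE_SIZE = 64  # bytes per cache line (a common size, assumed here)


def count_misses(addresses, prefetch_next_line: bool) -> int:
    """Count demand misses in an unbounded cache, optionally with a
    simple next-line prefetcher (hypothetical, simplified model)."""
    cached = set()
    misses = 0
    for addr in addresses:
        line = addr // LINE_SIZE
        if line not in cached:
            misses += 1  # the core has to wait for RAM
            cached.add(line)
        if prefetch_next_line:
            cached.add(line + 1)  # bring the following line in ahead of time
    return misses


# Sequential scan over 4 KiB, reading 8 bytes at a time.
scan = range(0, 4096, 8)
print(count_misses(scan, prefetch_next_line=False))  # 64
print(count_misses(scan, prefetch_next_line=True))   # 1
```

The same next-line policy does nothing for an access pattern that skips lines, which is one reason real prefetchers try to detect strides rather than always fetching the adjacent line.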

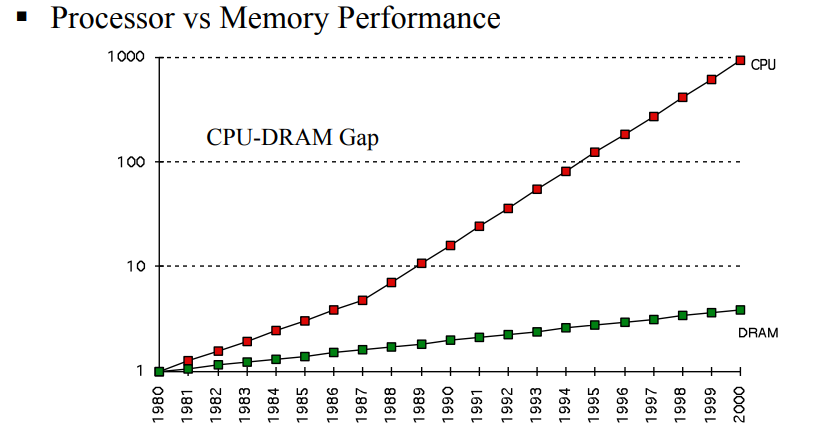

The second advantage is that its clock frequency can be very close to that of the processor. Lower latency and higher speed mean greater internal performance, and this is where its purpose comes in. Cache memory was designed primarily to resolve a contradiction: the CPU needs RAM but should not depend on RAM’s speed, because internally the CPU runs at far more cycles per second and reaches read and write speeds that RAM cannot even dream of.
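The speed gap between the CPU and RAM is easiest to see in clock cycles. Since 1 GHz means one cycle per nanosecond, a latency in nanoseconds multiplied by the clock in GHz gives the number of cycles the core spends waiting. The 4 GHz clock and the 1 ns and 80 ns latencies below are illustrative assumptions.

```python
def stall_cycles(latency_ns: float, clock_ghz: float) -> float:
    """Clock cycles that elapse while waiting `latency_ns` nanoseconds."""
    # At 1 GHz one cycle lasts 1 ns, so cycles = nanoseconds * GHz.
    return latency_ns * clock_ghz


CLOCK_GHZ = 4.0  # assumed core clock
print(f"cache hit (~1 ns):   {stall_cycles(1.0, CLOCK_GHZ):.0f} cycles")   # 4 cycles
print(f"RAM access (~80 ns): {stall_cycles(80.0, CLOCK_GHZ):.0f} cycles")  # 320 cycles
```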

Cache memory avoids this “delay”: the wait between the moment memory supplies the data and the moment the CPU can process it and return the result.

Hence its hierarchy of L1, L2 and L3. Each piece of data goes to one of these levels according to its size and priority: the higher the level, the smaller its capacity, but the higher the performance it achieves.
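The way L1, L2 and L3 cooperate can be sketched as a chain of lookups: check the smallest, fastest level first, fall through to the next one on a miss, and copy the line into the levels above on the way back. This is a hypothetical simplification (fully associative LRU levels, capacities counted in cache lines, no write handling); real designs differ in associativity, inclusivity and replacement policy.

```python
from collections import Counter, OrderedDict


class LRUCache:
    """Fully associative cache of line numbers with LRU replacement (simplified)."""

    def __init__(self, capacity: int):
        self.capacity = capacity
        self.lines = OrderedDict()

    def lookup(self, line: int) -> bool:
        if line in self.lines:
            self.lines.move_to_end(line)  # mark as most recently used
            return True
        return False

    def fill(self, line: int) -> None:
        self.lines[line] = True
        self.lines.move_to_end(line)
        if len(self.lines) > self.capacity:
            self.lines.popitem(last=False)  # evict the least recently used line


def access(levels, line: int) -> str:
    """Return the name of the level that served `line`, filling the levels above it."""
    for i, (name, cache) in enumerate(levels):
        if cache.lookup(line):
            for _, upper in levels[:i]:
                upper.fill(line)
            return name
    for _, cache in levels:  # missed everywhere: bring the line in from RAM
        cache.fill(line)
    return "RAM"


# Hypothetical capacities in cache lines: small and fast on top, larger below.
levels = [("L1", LRUCache(4)), ("L2", LRUCache(16)), ("L3", LRUCache(64))]
trace = list(range(4)) * 2 + list(range(8)) * 2
counts = Counter(access(levels, line) for line in trace)
print(counts)
```

In this trace, the repeated loop over four lines fits in the four-line L1 once it has been loaded from RAM, while the wider eight-line loop no longer fits there and its second pass is served from L2, which mirrors the size-versus-speed split described above.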

It is an eternal fight between optimizing performance, speed and power consumption while acting as the intermediary for the biggest bottleneck in any PC or server: RAM. Therefore, having more levels does not by itself mean higher performance. An optimal balance between performance, latency and size is needed, and the price rises sharply if the size is increased greatly.
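Why an extra level is not automatically a win can be shown by extending the AMAT formula to several levels, where each level’s lookup time is paid by every access that reaches it. In this minimal sketch the latencies and local miss rates (the fraction of accesses reaching a level that miss there) are hypothetical; the point is only that a third level helps when it catches enough of L2’s misses and hurts when it mostly adds latency.

```python
def amat_multilevel(levels, memory_ns: float) -> float:
    """Average access time for a chain of caches in front of RAM.

    levels: list of (hit_time_ns, local_miss_rate) ordered from L1 downward.
    Assumes each level is checked only after the previous one misses.
    """
    time = memory_ns
    for hit_time, miss_rate in reversed(levels):
        time = hit_time + miss_rate * time
    return time


RAM_NS = 80.0  # assumed RAM latency
two_levels = [(1.0, 0.1), (4.0, 0.5)]
print(f"L1+L2:          {amat_multilevel(two_levels, RAM_NS):.2f} ns")                   # 5.40 ns
print(f"+ weak L3:      {amat_multilevel(two_levels + [(20.0, 0.9)], RAM_NS):.2f} ns")   # 6.00 ns
print(f"+ effective L3: {amat_multilevel(two_levels + [(20.0, 0.4)], RAM_NS):.2f} ns")   # 4.00 ns
```

With these assumed figures, an L3 that misses 90% of the time makes the average worse than having no L3 at all, while one that catches 60% of L2’s misses improves it.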

A balance that is difficult to maintain, but which is essential today and in the near future.