Cache DRAM is the concept of adding an extra level of cache between the processor and main RAM in order to increase the processor's performance. But what does it change in a processor's architecture, and how does it work? We explain it below, along with which processors will use this architecture.

A few days ago, an Apple patent surfaced that mentions the use of Cache DRAM in one of its future processors. The concept may seem exotic, but it is not, so let's demystify it.

DRAM memory as a cache, a contradiction

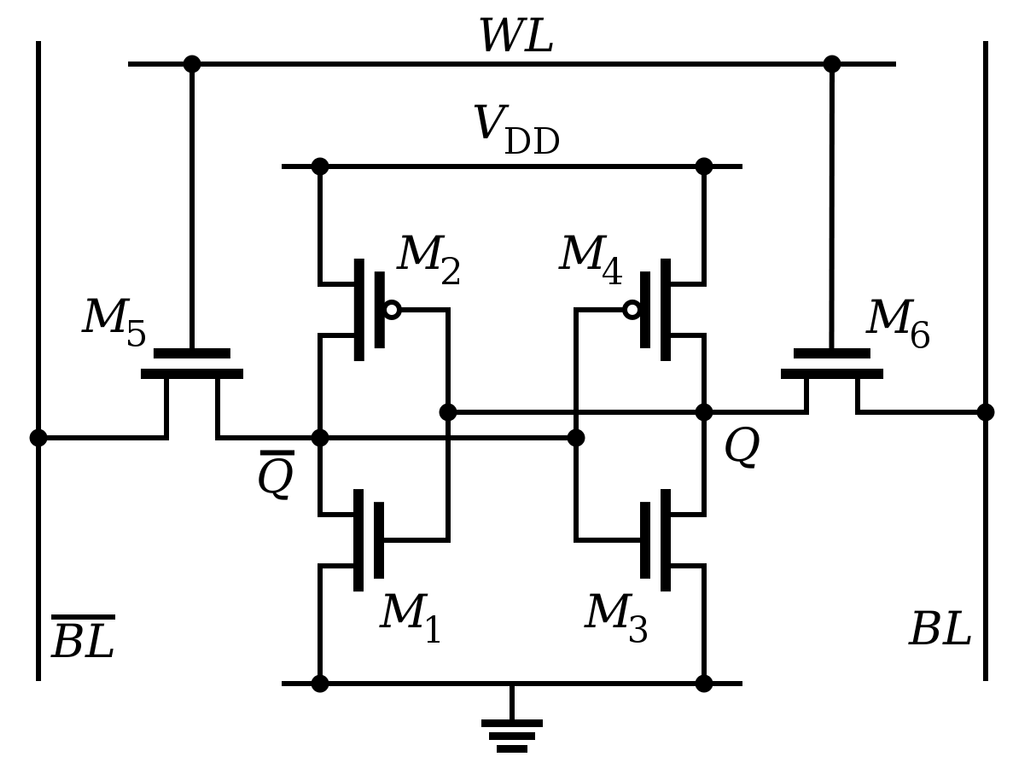

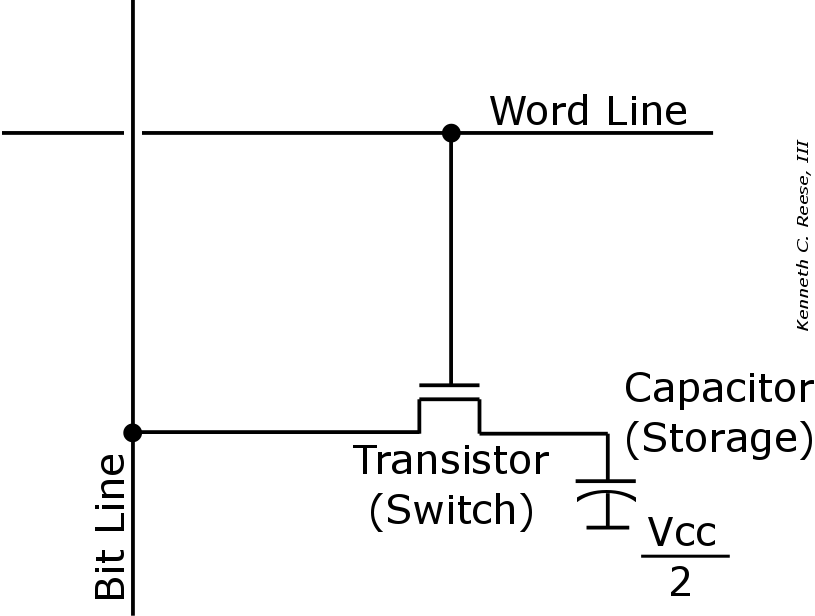

All the RAM used today outside a processor is DRAM (Dynamic RAM), while the memory used inside processors is SRAM (Static RAM). Both are accessed in much the same way, but they differ in how they store each bit. A DRAM cell keeps the bit as charge in a tiny capacitor, which leaks over time. An SRAM cell keeps it in a latch built from several transistors, typically six.

DRAM is much cheaper per bit, but by its nature it needs constant refreshing, and its access speed is lower than that of SRAM. For these reasons it is not generally used inside processors. In addition, DRAM scales worse than SRAM to newer manufacturing processes. IBM has used DRAM as the last-level cache in its POWER CPUs for high-performance computing, but its next generation will use SRAM instead.
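To put a rough number on the cost of constant refreshing, the sketch below computes the fraction of time a DRAM device is busy refreshing rather than serving requests. The figures are assumptions for illustration and do not come from the article:

- A refresh command every 7.8 µs, the usual DDR4 tREFI at normal temperature.
- A refresh cycle time of 350 ns, a typical tRFC for an 8 Gb DDR4 die.

Real values depend on the device and the operating temperature.

```python
def refresh_overhead(t_refi_ns: float, t_rfc_ns: float) -> float:
    """Fraction of time a DRAM rank is unavailable because it is refreshing.

    t_refi_ns: average interval between refresh commands (tREFI), in ns.
    t_rfc_ns:  time one refresh command keeps the rank busy (tRFC), in ns.
    """
    if t_rfc_ns >= t_refi_ns:
        raise ValueError("tRFC must be shorter than tREFI")
    return t_rfc_ns / t_refi_ns


# Assumed, illustrative DDR4-like timings (not taken from the article).
T_REFI_NS = 7800.0  # 7.8 microseconds between refresh commands
T_RFC_NS = 350.0    # duration of each refresh

overhead = refresh_overhead(T_REFI_NS, T_RFC_NS)
print(f"Time spent refreshing: {overhead:.1%}")
```

SRAM cells hold their value without being refreshed, so SRAM has no equivalent overhead.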

So, in principle, cache (normally associated with SRAM) and DRAM do not go together. Despite the case of IBM's CPUs, we are not going to talk about using DRAM as a cache inside the processor.

DRAM cache and HBM memory as an example

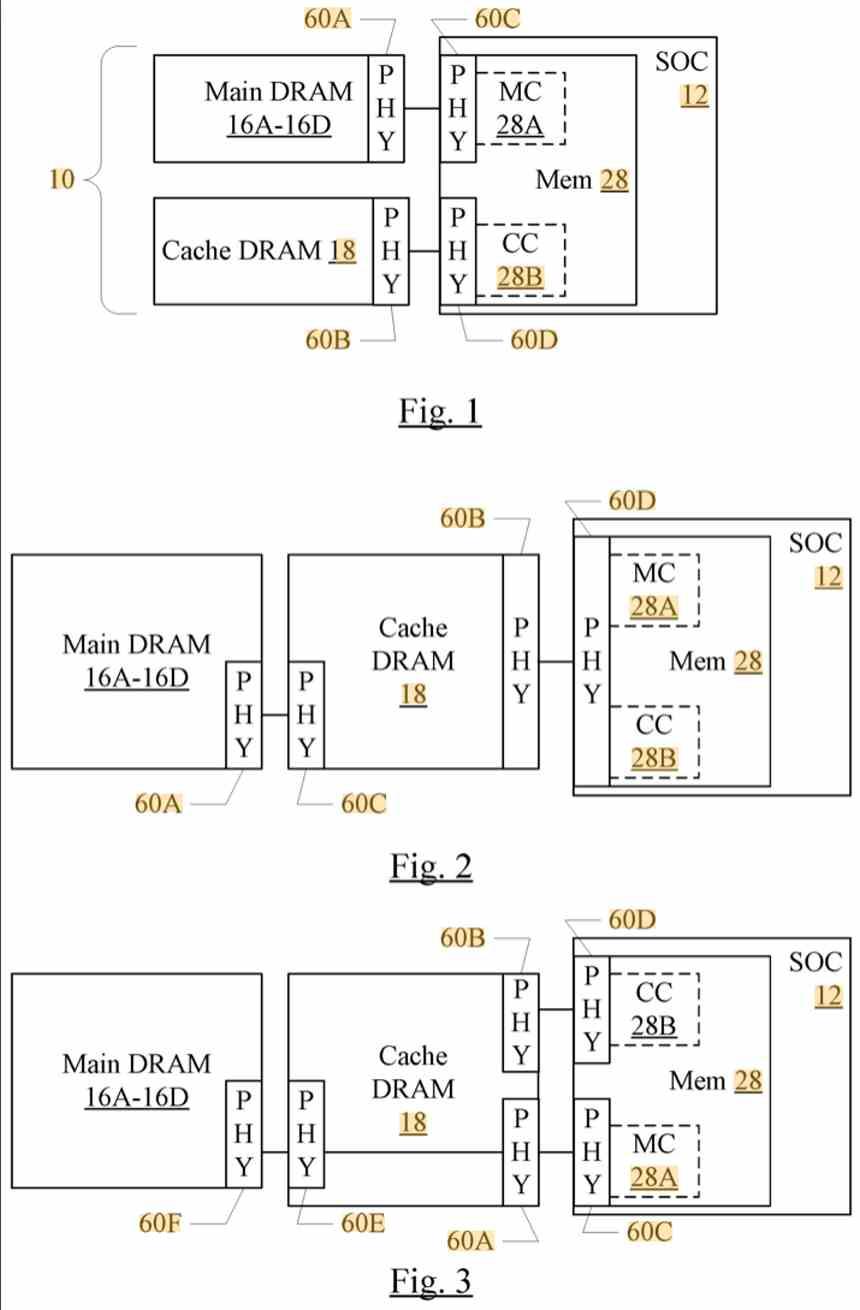

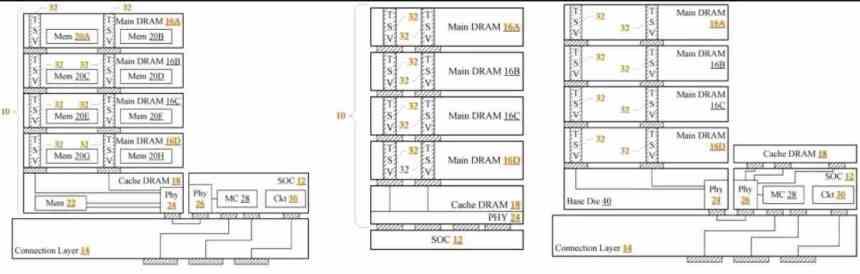

Cache DRAM consists of adding an extra layer to the memory hierarchy, between the processor's last-level cache and main system memory. This layer is built from DRAM with higher access speed and lower latency than the DRAM used as main memory.
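The benefit of that extra layer can be estimated with the usual average memory access time (AMAT) formula, applied level by level:

AMAT = hit time + miss rate × miss penalty

The sketch below is illustrative only: every latency and miss rate in it is a made-up assumption, not a figure from the article or from any real product. It also assumes the DRAM cache is checked before main memory, so a DRAM-cache miss pays both latencies.

```python
def amat(levels, memory_latency_ns):
    """Average memory access time for a hierarchy searched level by level.

    levels: list of (hit_time_ns, local_miss_rate), ordered from L1 outward.
    memory_latency_ns: latency of main memory, paid when every level misses.
    """
    penalty = memory_latency_ns
    # Work from the outermost level back to L1: each level's miss penalty
    # is the AMAT of everything beyond it.
    for hit_time_ns, miss_rate in reversed(levels):
        penalty = hit_time_ns + miss_rate * penalty
    return penalty


# Hypothetical figures, chosen only to illustrate the calculation.
caches = [(1.0, 0.10), (4.0, 0.40), (12.0, 0.50)]  # L1, L2, L3
dram_cache = (40.0, 0.30)                          # extra DRAM cache level
MAIN_MEMORY_NS = 80.0

without = amat(caches, MAIN_MEMORY_NS)
with_dram_cache = amat(caches + [dram_cache], MAIN_MEMORY_NS)
print(f"AMAT without DRAM cache: {without:.2f} ns")
print(f"AMAT with DRAM cache:    {with_dram_cache:.2f} ns")
```

With these hypothetical numbers, the DRAM cache only helps if 40 + m × 80 < 80, that is, if its local miss rate m stays below 50%. A DRAM cache therefore has to be clearly faster than main memory and hit often enough to be worthwhile.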

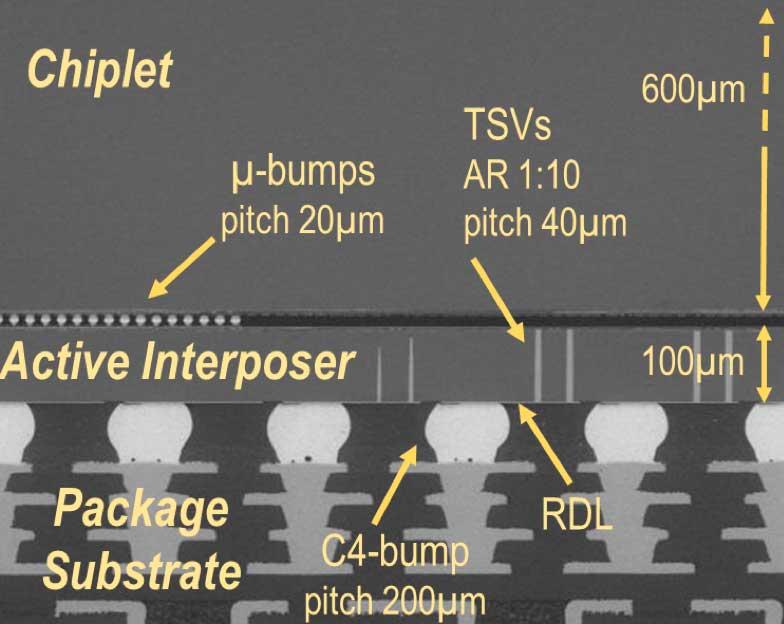

One way to achieve this is to use HBM as the DRAM cache. HBM is a type of DRAM in which several memory chips are stacked and connected vertically through TSVs (through-silicon vias), so called because they pass through the silicon of the chips. This type of connection is also used to build 3D NAND memory.

Because the connection is vertical, an interposer is needed: a board-like component that connects the processor to the HBM memory. Both the processor (whether a CPU or a GPU) and the HBM stacks are mounted on this interposer. The short distance between them lets HBM work as a DRAM with lower latency than classic DDR and GDDR memory.
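Part of what the interposer provides is room for a very wide interface. Each HBM stack talks to the processor over a 1024-bit bus, far wider than the 64-bit channel of a DDR4 module.

The sketch below computes peak bandwidth as bus width × per-pin data rate / 8. The inputs are example values for illustration: 2.0 Gb/s per pin for HBM2 and DDR4-3200 for DDR4. Note that peak bandwidth measures throughput, not latency.

```python
def peak_bandwidth_gb_per_s(bus_width_bits: int, gbit_per_pin: float) -> float:
    """Peak transfer rate in GB/s: each pin moves gbit_per_pin gigabits per second."""
    return bus_width_bits * gbit_per_pin / 8  # 8 bits per byte


# Example configurations (assumed values for illustration).
hbm2_stack = peak_bandwidth_gb_per_s(1024, 2.0)  # one HBM2 stack
ddr4_channel = peak_bandwidth_gb_per_s(64, 3.2)  # one DDR4-3200 channel

print(f"HBM2 stack:   {hbm2_stack:.1f} GB/s")
print(f"DDR4 channel: {ddr4_channel:.1f} GB/s")
```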

If the DRAM were even closer to the processor, as in a 3DIC configuration that places it directly on top of the chip, latency would be lower than with HBM. Access speed would therefore be higher, because signals would have a shorter distance to travel.

We have used HBM only to give you an idea; any type of memory in a 2.5DIC configuration would work as an example.

But a standard interposer is not enough

The next problem is that a cache does not work the same way as RAM. The processor's memory system does not copy instructions from RAM one line at a time. Instead, the cache copies the whole fragment of memory that contains the current line of code into the last level of cache.
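The fragment a cache copies is a fixed-size block, usually called a cache line. The sketch below shows how an address is split into a tag, a set index and an offset within the line. It assumes 64-byte lines and 1,024 sets; both sizes and the function names are hypothetical choices for illustration. Every address inside the same 64-byte block maps to the same line, so a single fetch also brings in the neighboring code and data.

```python
LINE_SIZE = 64   # assumed bytes per cache line (power of two)
NUM_SETS = 1024  # assumed number of sets (power of two)


def line_base(addr: int, line_size: int = LINE_SIZE) -> int:
    """Address of the first byte of the line that contains addr."""
    return addr & ~(line_size - 1)


def split_address(addr: int, line_size: int = LINE_SIZE, num_sets: int = NUM_SETS):
    """Return (tag, set_index, offset) for a set-associative cache."""
    offset_bits = line_size.bit_length() - 1  # log2(line_size)
    index_bits = num_sets.bit_length() - 1    # log2(num_sets)
    offset = addr & (line_size - 1)
    set_index = (addr >> offset_bits) & (num_sets - 1)
    tag = addr >> (offset_bits + index_bits)
    return tag, set_index, offset


# Two addresses 43 bytes apart fall in the same line, so one fill serves both.
a, b = 0x12345, 0x12370
print(hex(line_base(a)), hex(line_base(b)))
print(split_address(a), split_address(b))
```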

The last level of a processor's cache is shared by all the cores, while the levels closer to the first one are increasingly private to each core. Going down the hierarchy, each cache level holds a fragment of the level above it. When the processor looks for data, it searches the cache levels in ascending order, starting with L1, and each level has more capacity than the previous one.
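The lookup order and the "each level holds a fragment of the next" property can be modeled with a toy hierarchy. The sketch below is built on the following assumptions:

- Every level is fully associative with LRU replacement.
- The hierarchy is inclusive: when an outer level evicts a line, the smaller levels drop it too.
- The class names, level names and sizes are hypothetical.

A DRAM cache would simply be one more level appended before main memory.

```python
from collections import OrderedDict

LINE_SIZE = 64  # assumed cache-line size in bytes


class CacheLevel:
    """Toy fully associative cache level with LRU replacement."""

    def __init__(self, name: str, capacity_lines: int):
        self.name = name
        self.capacity = capacity_lines
        self.lines = OrderedDict()  # line address -> None, least recent first

    def lookup(self, line: int) -> bool:
        if line in self.lines:
            self.lines.move_to_end(line)  # mark as most recently used
            return True
        return False

    def fill(self, line: int):
        """Insert a line; return the evicted line address, or None."""
        self.lines[line] = None
        self.lines.move_to_end(line)
        if len(self.lines) > self.capacity:
            evicted, _ = self.lines.popitem(last=False)
            return evicted
        return None

    def invalidate(self, line: int) -> None:
        self.lines.pop(line, None)


def fill_inclusive(levels, upto: int, line: int) -> None:
    """Fill levels[0:upto], outermost first, keeping the hierarchy inclusive."""
    for j in range(upto - 1, -1, -1):
        evicted = levels[j].fill(line)
        if evicted is not None:
            # A line leaving level j must also leave every smaller level.
            for inner in levels[:j]:
                inner.invalidate(evicted)


def access(levels, addr: int) -> str:
    """Search L1, L2, ... in ascending order; return the name of the source."""
    line = addr - addr % LINE_SIZE
    for i, level in enumerate(levels):
        if level.lookup(line):
            fill_inclusive(levels, i, line)  # copy into the smaller levels
            return level.name
    fill_inclusive(levels, len(levels), line)
    return "memory"


hierarchy = [CacheLevel("L1", 2), CacheLevel("L2", 4), CacheLevel("L3", 8)]
for address in (0, 8, 64, 128, 0):
    print(hex(address), "served by", access(hierarchy, address))
```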

For HBM to behave like a cache, the element that connects the processor to that memory, the interposer, needs the circuitry that makes a cache work. That means checking whether a line is present, handling hits and misses, and fetching lines from main memory. A conventional interposer therefore cannot be used; additional circuitry has to be added to it so that the HBM can act as an extra level of cache.
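In the simplest terms, that extra circuitry has to decide on every request whether the line is already in the HBM. If it is not, the circuitry fetches the line from main memory and makes room for it.

The sketch below models such a controller as a direct-mapped, write-back cache. The class name, the sizes and the choice of direct mapping are all assumptions for illustration, not details of Apple's patent or of any real product. Main memory is modeled as a plain dictionary keyed by line address.

```python
from dataclasses import dataclass

LINE_SIZE = 64   # assumed cache-line size in bytes
NUM_SETS = 4096  # assumed number of direct-mapped entries in the HBM cache


@dataclass
class Entry:
    valid: bool = False
    dirty: bool = False
    tag: int = 0
    data: int = 0  # stands in for the contents of one line


class DramCacheController:
    """Hypothetical logic that lets a DRAM (e.g. HBM) stack act as a
    direct-mapped, write-back cache in front of main memory."""

    def __init__(self, main_memory: dict):
        self.main_memory = main_memory  # line address -> data
        self.entries = [Entry() for _ in range(NUM_SETS)]
        self.hits = 0
        self.misses = 0

    def _get_entry(self, addr: int) -> Entry:
        line = addr // LINE_SIZE
        index, tag = line % NUM_SETS, line // NUM_SETS
        entry = self.entries[index]
        if entry.valid and entry.tag == tag:
            self.hits += 1
            return entry
        self.misses += 1
        if entry.valid and entry.dirty:
            # Write the modified victim line back before reusing the slot.
            victim_addr = (entry.tag * NUM_SETS + index) * LINE_SIZE
            self.main_memory[victim_addr] = entry.data
        # Fetch the requested line from main memory into the slot.
        line_addr = (tag * NUM_SETS + index) * LINE_SIZE
        entry.valid, entry.dirty, entry.tag = True, False, tag
        entry.data = self.main_memory.get(line_addr, 0)
        return entry

    def read(self, addr: int) -> int:
        return self._get_entry(addr).data

    def write(self, addr: int, value: int) -> None:
        entry = self._get_entry(addr)
        entry.data = value
        entry.dirty = True  # main memory is updated only on eviction
```

For example, after `write(0, 7)` followed by a read of the conflicting address `NUM_SETS * LINE_SIZE`, the dirty line is written back, so main memory holds 7 at address 0.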