One of the biggest problems with PC gaming is the mismatch between the monitor’s refresh rate and the frame rate that games actually run at. And no, we are not going to talk about artifacts like tearing this time, but about something common that affects the gaming experience and that has unfortunately been accepted as normal.

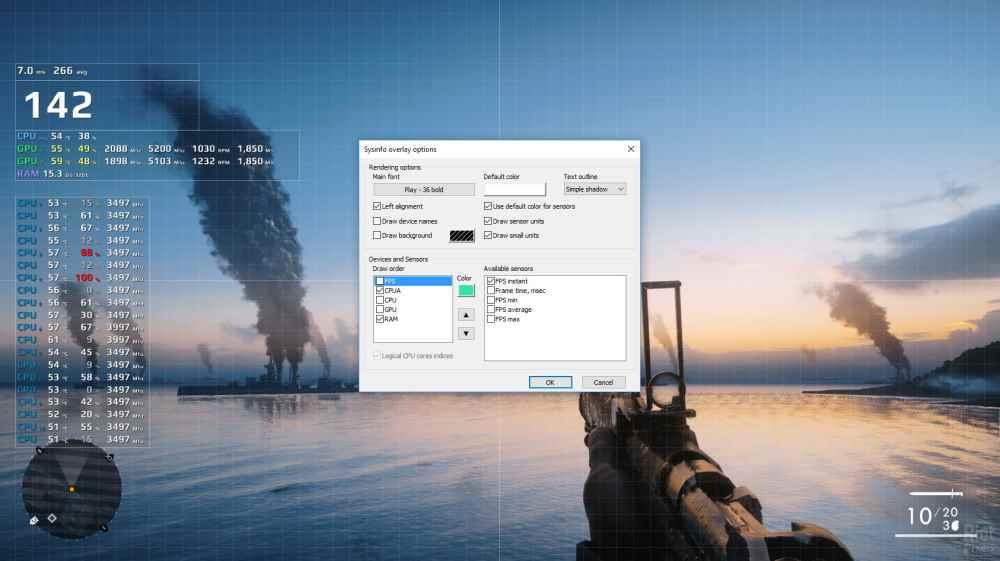

Each image you see on your monitor has first been generated by the computer’s graphics card, at a particular rate, and then sent to the panel to be displayed. However, if we start a game and turn on an FPS counter, we will often see that the figure shown does not match the monitor’s refresh rate. What happens when the two are out of step? Well, whole frames are lost along the way.

Your graphics card does not coordinate well with your monitor

When we talk about FPS we mean frames per second, the rate at which the game generates images. That is not necessarily the rate at which images are displayed on your screen, which is the refresh rate. Unfortunately, the two are often confused or even used interchangeably. They are not the same, and certain frame rates often feel strange when playing, as if movement were uneven or something did not quite add up.

Ideally, a game’s frame rate should match the monitor’s refresh rate or be an exact fraction of it. That is, if the panel has a 120 Hz refresh rate, the game should run at 120, 60, 40 or 30 FPS, all of which divide the refresh rate exactly, so that the two signals stay in step. The usual solution? Variable refresh rate technologies like NVIDIA’s G-SYNC or AMD’s FreeSync, where the monitor’s refresh rate follows the rate at which the game delivers frames.
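The 120 Hz example can be checked with a few lines of Python. This is only a minimal sketch: the function name `even_frame_rates` and the 30 FPS floor are assumptions made for illustration, not something any game or driver exposes.

```python
def even_frame_rates(refresh_hz: int, min_fps: int = 30) -> list[int]:
    """Return the frame rates that divide refresh_hz exactly, highest first.

    min_fps is an illustrative lower bound (assumed here), below which
    a frame rate is not considered worth targeting.
    """
    rates = []
    for cycles_per_frame in range(1, refresh_hz + 1):
        # Keep only cases where each frame spans a whole number of refreshes.
        if refresh_hz % cycles_per_frame == 0:
            fps = refresh_hz // cycles_per_frame
            if fps >= min_fps:
                rates.append(fps)
    return rates


print(even_frame_rates(120))  # [120, 60, 40, 30]
print(even_frame_rates(60))   # [60, 30]
```

Note that 50 FPS, for example, does not appear in either list: on a 60 Hz or 120 Hz panel it cannot be shown with every frame held for the same number of refresh cycles.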

However, the problem is that this adds cost for both the monitor manufacturer and the end user. The correct solution would be to make sure that games do not run at a frame rate that fails to divide evenly into the refresh rate of the screen we are using.

What are we referring to?

Suppose you have a video that plays at 24 frames per second, a very common speed, especially for film. If you play it on a screen with a 60 Hz refresh rate, there is a 1:2.5 ratio between the two: each frame would need to stay on screen for two and a half refresh cycles. Since a frame can only begin and end on a refresh boundary, and a new one cannot start halfway through a cycle, the two cannot stay coordinated, and some frames end up being held longer than others.
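To make the 24-on-60 case concrete, here is a small sketch that counts how many refresh cycles each frame ends up occupying. It assumes a simple vsync-style model in which a frame can only appear on the first refresh at or after its ideal start time; the function name `refreshes_per_frame` is made up for this example.

```python
import math
from fractions import Fraction


def refreshes_per_frame(fps: int, refresh_hz: int, frames: int) -> list[int]:
    """Count how many refresh cycles each source frame stays on screen.

    Assumed model: frame i ideally starts at i * (refresh_hz / fps)
    refresh cycles, but can only be swapped in on a whole refresh.
    """
    ratio = Fraction(refresh_hz, fps)  # ideal refreshes per frame, e.g. 5/2
    # First whole refresh on which each frame can actually appear.
    starts = [math.ceil(i * ratio) for i in range(frames + 1)]
    return [starts[i + 1] - starts[i] for i in range(frames)]


print(refreshes_per_frame(24, 60, 6))  # [3, 2, 3, 2, 3, 2]
print(refreshes_per_frame(30, 60, 4))  # [2, 2, 2, 2]
```

At 24 FPS the frames alternate between three and two refreshes, so motion is uneven even though the average is correct. At 30 FPS every frame is held for exactly two refreshes.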

Think of it as a kind of calendar that is not mathematically precise. The mismatch accumulates from one frame to the next until it becomes evident, giving us the feeling that the game is lagging, or that something is off between our actions, the game’s response and what we see on the screen.

The solution has been in use on consoles for some time

And this is none other than dynamic resolution: a target frame rate is set and, to keep it constant, the resolution varies according to how heavily loaded the game is. Unless you are very picky, you are unlikely to notice it, and it has become an efficient way to avoid certain image artifacts without forcing the user to buy an expensive monitor.
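As a rough illustration of the idea, the sketch below adjusts a render scale after each frame so that the next one fits the time budget of the target frame rate. Everything here is an assumption made for the example: the function name `next_render_scale`, the scale limits, and the simplification that rendering cost grows with the number of pixels. Real engines use their own, more elaborate heuristics.

```python
def next_render_scale(scale: float, frame_ms: float, target_fps: int = 60,
                      min_scale: float = 0.5, max_scale: float = 1.0) -> float:
    """Suggest the resolution scale (per axis) for the next frame.

    Assumption: frame time is proportional to pixel count, i.e. to
    scale squared, so the correction uses a square root.
    frame_ms is the measured time of the last frame and must be > 0.
    """
    budget_ms = 1000.0 / target_fps          # time available per frame
    proposed = scale * (budget_ms / frame_ms) ** 0.5
    # Clamp so the image never drops below or rises above set limits.
    return max(min_scale, min(max_scale, proposed))


# A frame that took 25 ms at full resolution misses the 60 FPS budget,
# so the scale is lowered for the next frame.
print(next_render_scale(1.0, 25.0) < 1.0)   # True
# A frame that finished early keeps the scale at its maximum.
print(next_render_scale(1.0, 10.0))         # 1.0
```

The point of the design is that the frame rate stays fixed at a value that suits the display, while image sharpness absorbs the variation in load.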

In other words, the aim is to achieve parity between the refresh rate and the rate at which the game generates its images, handled by the title itself, so that fewer people suffer from this problem.