From HBM memory to 3D NAND chips, many chips on the hardware market are built using what are called TSVs, short for “Through-Silicon Vias”. The name comes from the fact that these vias cross the chips vertically, allowing stacked chips to communicate with one another. In this article we explain what they are, how they work and what they are for.

In the hardware world, discussion usually revolves around speed: the bandwidth of a memory, the clock speed of a processor, how many operations of a given type a processor performs per second, and so on. Very seldom, however, do we ask how chips communicate with each other and whether that matters.


This article focuses on TSV, a technology that can be used to interconnect chips of any type.

What are through-silicon vias (TSVs)?

If we look at most motherboards we notice two things. The first is that most connections between chips are horizontal: the traces on the board that carry signals from one chip to another run across its surface.

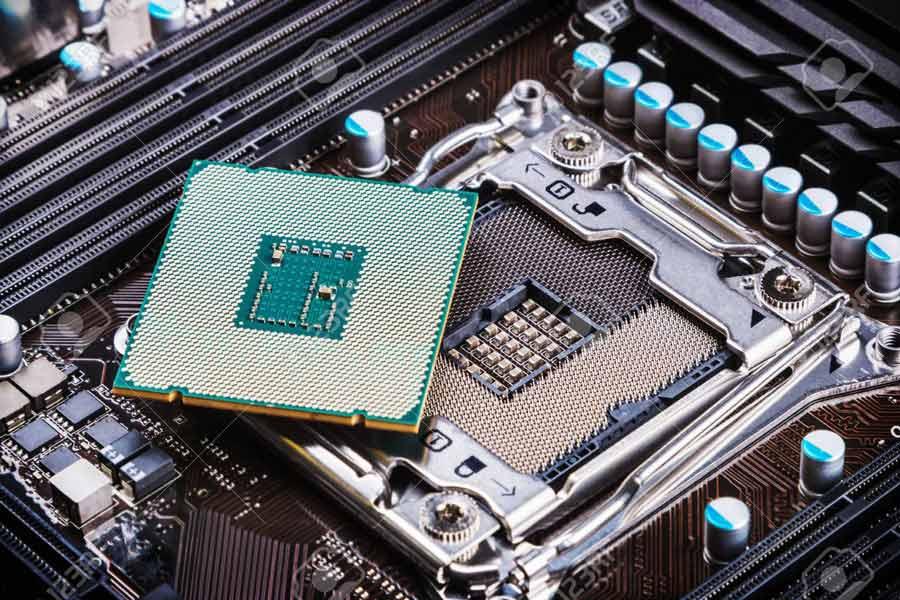

The second is the case of CPUs, which sit in a socket that acts as an intermediary between the processor and the board, and connect to it vertically.

In general, though, chips are almost never connected vertically to one another, even though chip and processor design is moving in that direction and some examples are already on the market. So how can two or more chips be connected vertically?

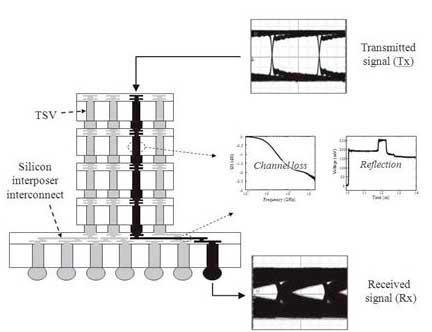

This is exactly what through-silicon vias do: they are vertical electrical connections that cross the different chips, or the different layers of a single chip, that make up the stack. They are called “through” silicon because they literally pass through it.

The applications and advantages of using TSVs

One application of TSVs is splitting a complex processor into several chips, one for each of its parts. Vertical connectivity adds an advantage: it allows far more connections between chips, so high bandwidth can be reached with a wide interface instead of a very high clock frequency, which would increase the power consumed during data transmission.
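To make the width-versus-clock trade-off concrete, here is a minimal Python sketch that computes peak bandwidth as bus width times per-pin data rate. The figures are illustrative assumptions, roughly in the range of an HBM2 stack (1024-bit interface at about 2 Gbps per pin) and a single GDDR5 chip (32-bit interface at about 8 Gbps per pin); the function names are hypothetical.

```python
def bandwidth_gb_per_s(bus_width_bits: int, gbps_per_pin: float) -> float:
    """Peak bandwidth in GB/s: every pin moves gbps_per_pin gigabits per second."""
    return bus_width_bits * gbps_per_pin / 8  # 8 bits per byte


def required_pin_rate(target_gb_per_s: float, bus_width_bits: int) -> float:
    """Per-pin data rate (Gbps) needed to reach a target bandwidth on a given bus width."""
    return target_gb_per_s * 8 / bus_width_bits


if __name__ == "__main__":
    wide = bandwidth_gb_per_s(1024, 2.0)   # wide and slow: HBM2-like stack (assumed figures)
    narrow = bandwidth_gb_per_s(32, 8.0)   # narrow and fast: GDDR5-like chip (assumed figures)
    print(f"1024-bit @ 2 Gbps/pin: {wide:.0f} GB/s")
    print(f"32-bit @ 8 Gbps/pin: {narrow:.0f} GB/s")
    print(f"a 32-bit bus needs {required_pin_rate(wide, 32):.0f} Gbps/pin to match")
```

The wide, slow interface reaches 256 GB/s, while the narrow one would need 64 Gbps per pin to match it. The large number of connections that vertical stacking makes practical is what allows the slower, lower-power option.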

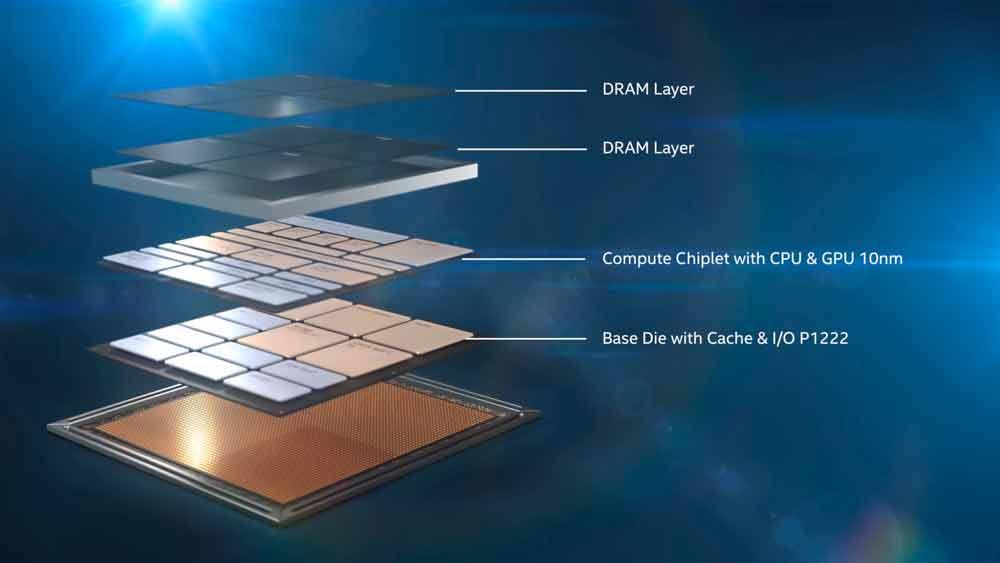

For example, in the future we are likely to see CPUs and GPUs whose last-level cache sits on a separate chip, with the same bandwidth but several times the capacity, which could greatly increase performance. Another example is Intel's Foveros, which uses TSVs to connect the two parts of the Lakefield SoC: the Compute Chiplet and the Base Die, which holds the system I/O.

The reason for dividing a processor into different parts is that the larger a chip gets, the greater the chance of a defect in its circuitry. Fewer chips per wafer come out defect-free and usable, and those that do must also cover the cost of the ones that failed. In theory, then, reducing the size of each chip reduces the overall cost, although, as we will see later, this is not entirely the case.
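The yield argument can be illustrated with the classic Poisson yield model, Y = exp(-A × D0), where A is the die area and D0 the defect density. This is one of the simplest textbook models and is used here as an assumption, not as what any particular foundry uses; the defect density and total area are hypothetical, and wafer-edge losses and assembly yield are ignored.

```python
import math


def poisson_yield(area_cm2: float, defects_per_cm2: float) -> float:
    """Fraction of dies with zero defects under a simple Poisson model: Y = exp(-A * D0)."""
    return math.exp(-area_cm2 * defects_per_cm2)


def silicon_per_good_product(total_area_cm2: float, pieces: int, d0: float) -> float:
    """Wafer area spent per working product when the design is split into `pieces`
    equal dies, each tested before assembly (bonding/packaging losses ignored)."""
    die_area = total_area_cm2 / pieces
    # Each good die costs die_area / yield of silicon; a product needs `pieces` of them.
    return pieces * die_area / poisson_yield(die_area, d0)


if __name__ == "__main__":
    D0 = 0.1  # hypothetical defect density, defects per cm^2
    for n in (1, 2, 4):
        print(f"{n} die(s): {silicon_per_good_product(6.0, n, D0):.2f} cm^2 per good product")
```

Splitting the same 6 cm² design into smaller, individually tested dies lowers the silicon spent per working product (roughly 10.9 cm² for one die versus 8.1 cm² for two in this model). The losses this sketch ignores, such as bonding and packaging, are where the saving stops being that simple.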

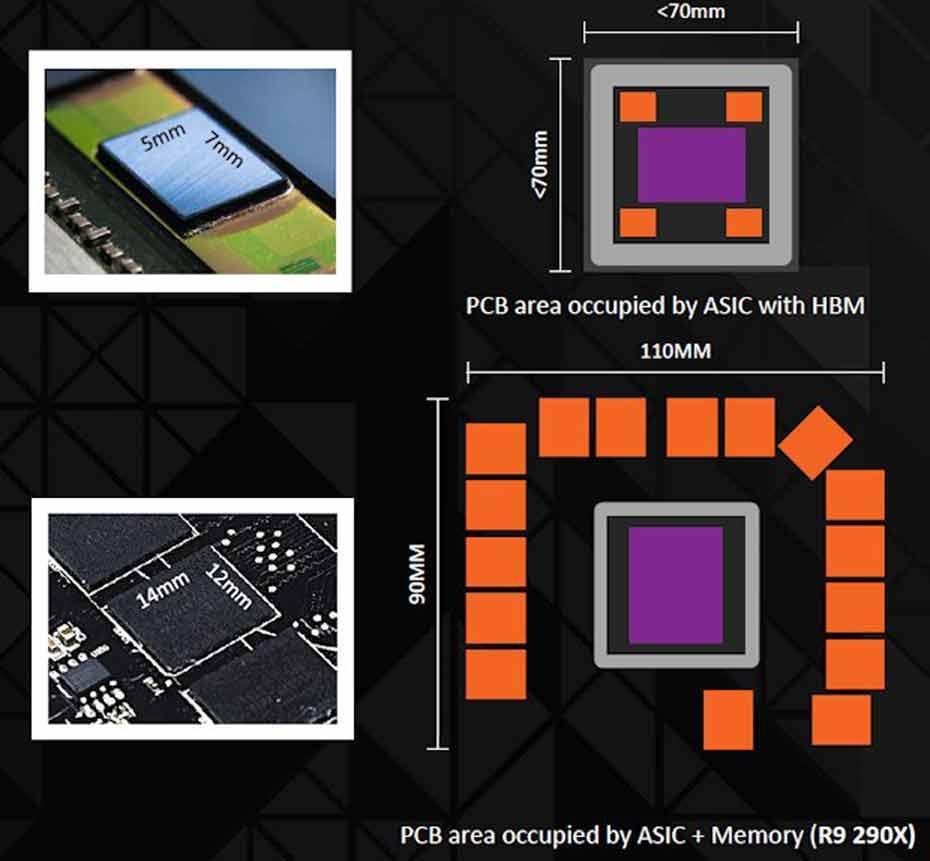

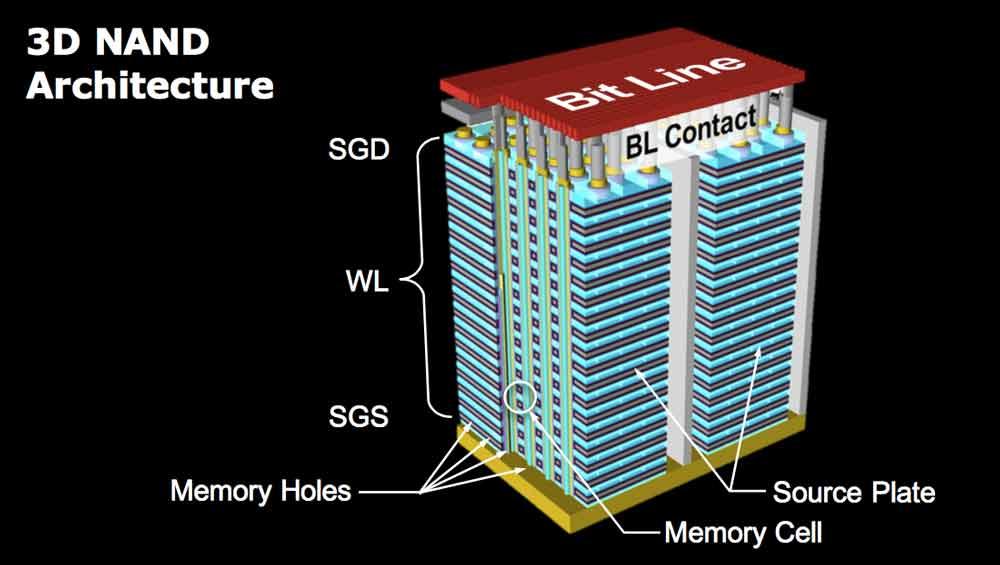

The second application has to do with space. Stacking several chips vertically greatly reduces the board area they occupy, since they are no longer scattered around the board. The best-known example is HBM memory, used as VRAM in certain graphics processors, but there are others, such as Samsung's V-NAND memory, which stacks many layers of NAND Flash memory cells vertically.

A lesser-known option combines logic and memory by placing the memory directly on top of a processor. The best-known example is Wide I/O, a type of memory that appeared in smartphones a few years ago and consisted of memory stacked on top of the SoC and connected to it through TSVs.

Why has the adoption of TSVs been so slow?

TSV technology has several inherent problems. As a result, despite looking very promising on paper for decades, it has never quite taken off and has remained a manufacturing method for small, high-margin markets.

- The first problem is cost: the technology is extremely expensive to implement and requires profound changes to the production lines of many companies, which have been making chips without TSVs for years. For many applications, conventional manufacturing has proven good enough.

- The second problem is that if any part of the vertical structure fails, the entire stack has to be thrown away, which makes TSV-interconnected systems much more expensive to manufacture. HBM memory is a telling example: its cost is so high that it is not viable as memory for the consumer market.

- The third problem is thermal throttling. Chips reach their rated clock speeds only under certain temperature conditions, and those conditions change when another heat-emitting chip sits nearby. For example, two processors that each reach 1 GHz on their own might reach only 0.8 GHz each when stacked vertically in a TSV structure, because of the extra heat.
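The second problem compounds quickly with stack height. The minimal Python sketch below assumes a stack works only if every die and every die-to-die bond is good, and uses hypothetical per-die and per-bond yields; the function name is illustrative.

```python
from math import prod
from typing import Sequence


def stack_yield(die_yields: Sequence[float], bond_yield: float) -> float:
    """Probability that a vertical stack works: every die must be good and every
    die-to-die bond (one fewer than the number of dies) must succeed."""
    bonds = len(die_yields) - 1
    return prod(die_yields) * bond_yield ** bonds


if __name__ == "__main__":
    # Hypothetical figures: 95% of dies are good, 99% of bonding steps succeed.
    for height in (1, 4, 8):
        y = stack_yield([0.95] * height, 0.99)
        print(f"{height}-high stack: {y:.1%} usable")
```

Even with 95% of dies good, an 8-high stack in this toy model comes out usable only about 62% of the time, and every failed stack takes its good dies with it.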

This third problem is the one that most worries engineers today, and cooling mechanisms are being developed to keep every chip in the stack as cool as possible and avoid thermal throttling.
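The thermal effect can be sketched with a deliberately simplified model, offered as an assumption rather than how real stacks are analyzed. Each die's temperature rises by its own power times a self thermal resistance (R_self) plus its neighbour's power times a coupling resistance (R_couple), and power is taken as proportional to clock frequency (fixed voltage). Both dies are treated symmetrically, although in a real stack the die farther from the heatsink typically runs hotter. All parameter names and values are hypothetical.

```python
def max_frequency_ghz(t_max_c: float, t_ambient_c: float, watts_per_ghz: float,
                      r_self_c_per_w: float, r_couple_c_per_w: float = 0.0) -> float:
    """Highest clock at which a die stays at or below t_max_c in a symmetric
    two-die model where both dies run at the same clock.

    Temperature of one die: T = T_amb + R_self * P_own + R_couple * P_neighbour,
    with power assumed proportional to clock: P = watts_per_ghz * f.
    """
    headroom = t_max_c - t_ambient_c
    return headroom / (watts_per_ghz * (r_self_c_per_w + r_couple_c_per_w))


if __name__ == "__main__":
    # Hypothetical numbers chosen so that a lone die tops out at 1 GHz.
    alone = max_frequency_ghz(95.0, 45.0, watts_per_ghz=10.0, r_self_c_per_w=5.0)
    stacked = max_frequency_ghz(95.0, 45.0, watts_per_ghz=10.0,
                                r_self_c_per_w=5.0, r_couple_c_per_w=1.25)
    print(f"alone: {alone:.2f} GHz, stacked: {stacked:.2f} GHz")
```

With these numbers the lone die reaches 1.00 GHz and each stacked die 0.80 GHz, reproducing the illustrative figures in the third problem. The coupling term is what better cooling between the layers of a stack aims to reduce.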