In the history of computing, there has always been one supercomputer that has stood out above the rest. That is why we have decided to do a historical review of these beasts of computing, which have not only been unique, but have also broken records and set milestones. What have been the most outstanding supercomputers in history?

Supercomputers are what we colloquially call “NASA computers”: extremely powerful machines that are one of a kind in the world when they are built. Their enormous computing power is used to solve technical and scientific problems that could not be tackled otherwise.

Because they are one-off constructions, they are often more than a cluster of off-the-shelf server hardware: in several cases new components, such as entire processor architectures, have been created for them. Furthermore, a large part of the technological advances that later reached PCs originated in the development of a supercomputer and were then implemented at scale.

In short, when we refer to supercomputers we are referring to the most powerful hardware of each moment in the history of computing.

The CDC 6600, the first of the supercomputers in history

We owe the concept of the supercomputer to the computer scientist Seymour Cray, who was the first to propose the general architecture of one. Cray worked at Control Data Corporation (CDC), and when the company did not let him realize his invention he threatened to leave. In the end CDC gave in, and this allowed him to create the first supercomputer in history, the CDC 6600.

The CDC 6600 was the most powerful supercomputer from 1964 to 1969. It was a complex machine for its time, built from 400,000 transistors in total, with a clock speed of 10 MHz and floating-point performance of 3 MFLOPS. Let’s not forget that the first home computers, which came out a decade later, ran at speeds between 1 MHz and 4 MHz, were made up of a few thousand transistors, and lacked a floating point unit.

Until then the most powerful computer had been the IBM 7030, and the CDC 6600 outperformed it in every respect, making Seymour Cray and his designs a benchmark in high-performance computing. But the CDC 6600 was only the beginning of the story.

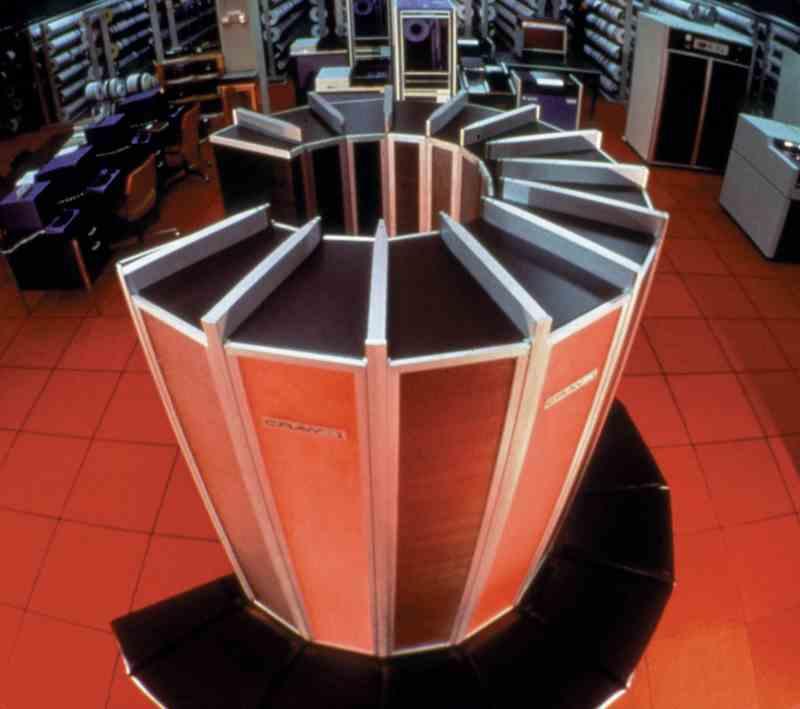

Cray-1, the supercomputer that saw the birth of the SIMD unit

Today SIMD units are found in the CPUs of devices of all kinds, but we owe their existence to the second supercomputer in history. It was also designed by Seymour Cray, this time under the company that bore his surname, Cray Research, and it was the first of that company’s supercomputers.

The Cray-1 was launched in 1975. It used an 80 MHz CPU with a built-in SIMD floating-point unit with 64-bit precision, a giant leap that took performance from the 3 MFLOPS of the CDC 6600 to 160 MFLOPS in the Cray-1. To give you an idea of what this meant, it was not until the mid-90s that a PC CPU matched the floating-point power of the Cray-1, and it was not until Intel’s SSE and AMD’s 3DNow! that floating-point SIMD units appeared in PC CPUs, with 64-bit precision arriving later still with SSE2.

In 1982 Cray Research launched an improved version of its supercomputer, the Cray X-MP, where the initials “MP” stand for multiprocessor: it had not one CPU but four, each running at 105 MHz, for a total of 820 MFLOPS. The company’s swan song, however, came in the form of the Cray-2, released in 1985, which increased performance to 1.9 GFLOPS.

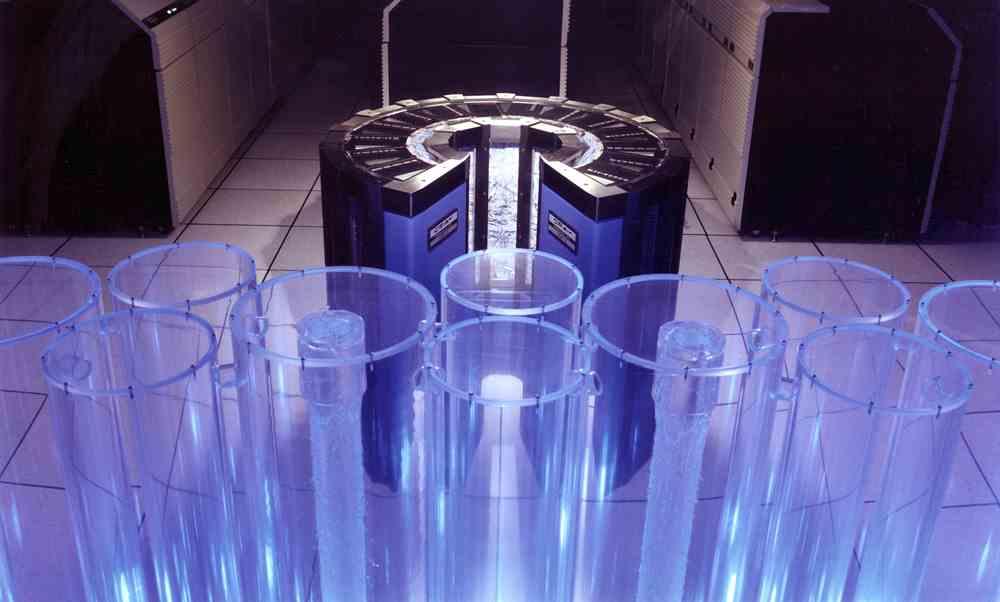

Cray-2, NASA’s supercomputer

We owe the “NASA supercomputer” meme to the Cray-2. Created for the famous space agency and deployed in 1985, it was a swan song for Cray Research and the last supercomputer that Seymour Cray designed there. Cray Research increased the CPU count of this supercomputer to 8 and added a series of additional processors responsible for handling access to memory, storage and I/O interfaces. Its computing power? 1.9 GFLOPS, so it was not such an impressive leap, but its most distinctive feature is that it was liquid-cooled.

However, the end of the Cold War was approaching, and Cray Research depended on the enormous defense budget of the United States to fund the design of its supercomputers. On top of that, its CPUs were enormous and impossible to transfer to other markets. In other words, when the Iron Curtain fell and interest in defense supercomputers waned, Cray lost the biggest customers that had allowed it not only to survive, but to develop its new processors.

ASCI Red, supercomputers reach the teraFLOP

It soon became clear that supercomputers would have to be built from less complex processors, as no one was willing to spend such huge amounts of capital once the Cold War was over. A paradigm shift was necessary, and it came in the form of building supercomputers from much simpler processors, such as those used in PCs and servers.

Among PC CPUs, one of the most important was Intel’s Pentium Pro, since it introduced features such as a second-level cache, support for more than one processor, and out-of-order execution. The company co-founded by Gordon Moore took on the design of ASCI Red, a beast for its time made up of no fewer than 9,298 Intel Pentium Pro processors, housed in 76 compute cabinets and accompanied by 1,212 GB of RAM.

ASCI Red was the first supercomputer in the history of computing to reach 1 TFLOPS. Unlike Seymour Cray’s designs, however, it did not rely on SIMD: the Pentium Pro lacked such a unit entirely. What it does stand out for is being the first supercomputer in history to be built from PC CPUs.

IBM Blue Gene and the NEC Earth Simulator, legendary supercomputers

Once the teraFLOP had been reached, the next challenge was to get to the PetaFLOP, that is, 1,000 times the computing power of the Intel-designed ASCI Red, a goal that was still a long way off. One of the companies that took on this challenge was IBM, which decided to use its PowerPC processors to create Blue Gene, a project that began in 1999 and was not finished until November 2004.

The first Blue Gene, known as Blue Gene/L, was made up of no fewer than 131,072 CPUs, an astronomical figure that allowed it to reach 70.72 TFLOPS, something that an NVIDIA RTX 3090 does not reach by itself. With that figure it surpassed NEC’s Earth Simulator, which was the most powerful supercomputer at the time.

The Earth Simulator was a joint development between NEC and the government of Japan, with a capacity of almost 40 TFLOPS, designed with weather prediction in mind. It differed from Blue Gene in that, like the Cray machines, it was based on processors with wide SIMD units, while the IBM design was built on PowerPC CPUs without units of this kind. The IBM design was therefore closer to ASCI Red, while the Earth Simulator was closer to the early Crays.

The IBM Roadrunner, the PetaFLOP is finally reached

In 2001, IBM began development of a processor known as the Cell Broadband Engine, which became famous as the main CPU of the PlayStation 3 console but was also used to build the IBM Roadrunner, a supercomputer that combined AMD Opteron CPUs with a variant of the CBEA used in the PlayStation 3, which contained SIMD vector processors called SPEs.

The IBM Roadrunner consisted of 6,912 dual-core AMD Opteron CPUs and 12,960 Cell Broadband Engine processors, a much lower count than Blue Gene, which did not prevent it from breaking the 1 PetaFLOP barrier. Although the CBEA is a CPU in its own right, in the Roadrunner it was used as a support processor to speed up the parallel parts of the code, making it a precursor to the use of GPUs for these tasks in a supercomputer.

The first supercomputer to use GPUs

Nowadays, most supercomputers are designed around CPUs and GPUs, but this was not always the case. It was not until 2008 that the first supercomputer to use GPUs for its calculations appeared, although it did so on a fairly modest system compared to the IBM Roadrunner.

TSUBAME was created by the Tokyo Institute of Technology and used the first generation of NVIDIA Tesla to reach 170 TFLOPS, thus beating Blue Gene and the Earth Simulator. Graphics processors had been able to run increasingly complex algorithms since the introduction of shader units, and with the NVIDIA G80 architecture they began to be used to accelerate scientific computing algorithms.

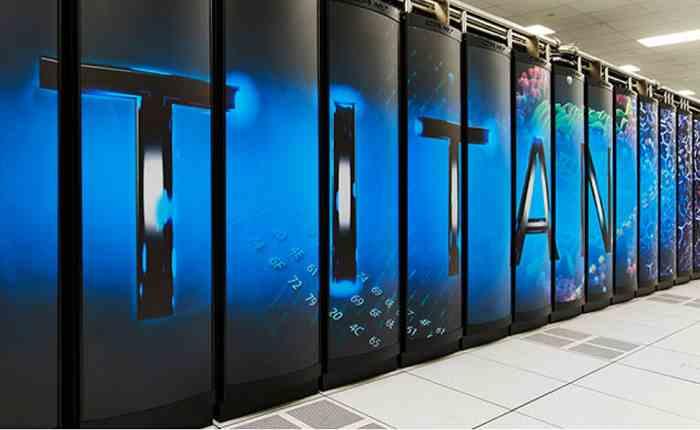

However, a GPU-based supercomputer did not take the top spot until 2013, when a resurrected Cray launched Titan, built from a combination of the AMD Opteron CPUs and NVIDIA Tesla GPUs of the time. The computing power obtained? 10 PetaFLOPS. So we are talking about a jump of more than 50 times in just 5 years, which demonstrates the enormous efficiency of GPUs for this kind of computing.

The era of ExaFLOP, the new barrier about to be overcome

Today we are in the era of the ExaFLOP, and the goal is to achieve 1 million TeraFLOPS with a supercomputer. This has brought with it an obsession with reducing the energy consumed by communication, which has led to the development of advanced packaging and interconnect systems that make it possible to reach that figure without sending energy consumption through the roof.
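To put the jumps described in this article side by side, here is a small Python sketch that converts the quoted figures to plain FLOPS and compares them. The helper names `to_flops` and `speedup` are our own, chosen only for illustration, and the numbers are simply the ones quoted in this article, not official benchmark results.

```python
# Decimal prefixes for FLOPS (floating-point operations per second).
PREFIXES = {"M": 10**6, "G": 10**9, "T": 10**12, "P": 10**15, "E": 10**18}


def to_flops(value, prefix):
    """Convert a figure such as (160, "M") into plain FLOPS."""
    return value * PREFIXES[prefix]


def speedup(newer, older):
    """How many times faster `newer` is than `older` (both are (value, prefix) pairs)."""
    return to_flops(*newer) / to_flops(*older)


# Figures as quoted in this article (illustrative, not measured here).
cdc_6600 = (3, "M")
cray_1 = (160, "M")
tsubame = (170, "T")
titan = (10, "P")
exaflop = (1, "E")

print(f"Cray-1 vs CDC 6600: {speedup(cray_1, cdc_6600):.1f}x")
print(f"Titan vs TSUBAME:   {speedup(titan, tsubame):.1f}x")
print(f"1 ExaFLOP in TeraFLOPS: {speedup(exaflop, (1, 'T')):,.0f}")
```

With these figures, the Cray-1 comes out at roughly 53 times the CDC 6600, Titan at roughly 59 times TSUBAME, and one ExaFLOP at exactly one million TeraFLOPS.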

We will see the first supercomputers under this new paradigm from 2022: El Capitan, built exclusively with AMD technology, and Aurora, with CPU and GPU technology from Intel. Both represent a kind of cold war between the two companies, and their development has influenced, and will continue to influence, the architectures and designs we will see in our PCs.