The market offers a huge range of CPUs of every family, type and size, but from the least powerful smartphone to the most advanced military supercomputer they all have one thing in common: we are in the era of multi-core CPUs. So what prevents designs from having even more cores? What sets the limit?

It has been more than 15 years since the first multi-core PC CPUs were released, and one question comes up often: how far can a CPU scale in terms of core count? We will try to answer it here. Keep reading and you will understand why processors are designed with a core limit in mind.

What limits the number of cores in a CPU?

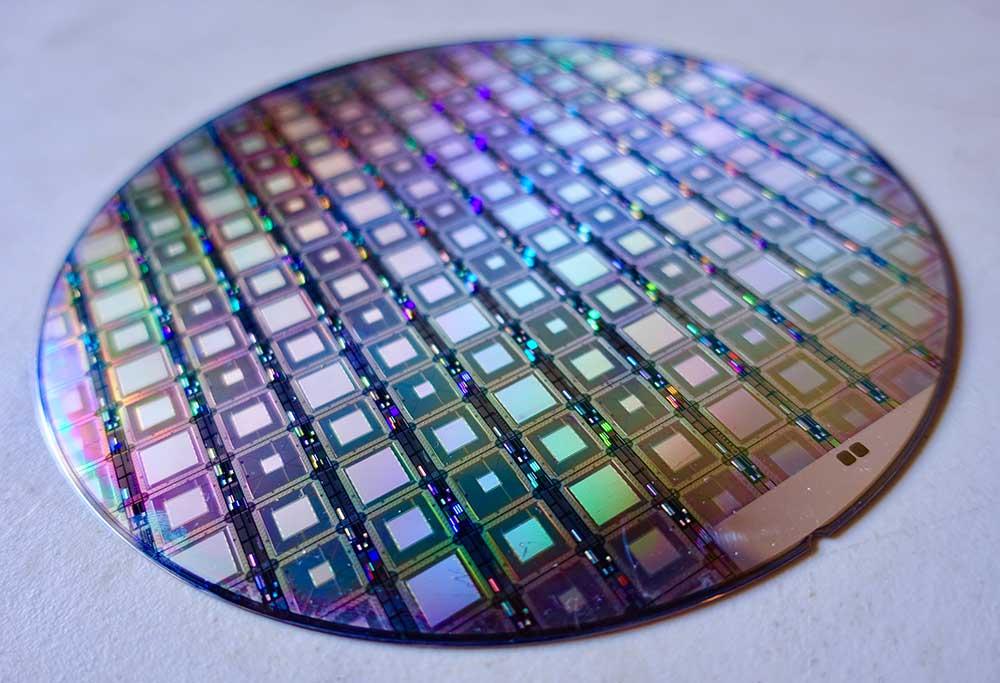

The era of multi-core CPUs began when Dennard scaling broke down. This rule of microprocessor design held that power density stays roughly constant as transistors shrink, which allowed clock speeds to keep rising with every new node. It stopped holding in the mid-2000s, at around the 65 nm node, forcing CPU designers to take the path of multi-core configurations instead of relying on a single, ever-faster core.

So what are the reasons why the number of cores in a processor is limited? Let’s take a look.

Physical limits to the inclusion of a greater number of cores

The first obvious limit is the size of the chip and the number of transistors that fit in that area, both of which depend directly on the manufacturing node used. The second limit is the power budget available to the processor. In addition, not all architectures are equally energy efficient, so depending on what a CPU is built for, we find designs with a few very complex cores and others with a large number of much simpler cores.
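As a rough illustration of how these two budgets interact, the sketch below estimates an upper bound on the core count from a die area budget and a power budget. Every name and number in it is hypothetical and chosen only to make the arithmetic visible; it does not describe any real product.

```python
def max_cores(die_area_mm2, core_area_mm2, uncore_area_mm2,
              power_budget_w, core_power_w, uncore_power_w):
    """Upper bound on core count under an area budget and a power budget.

    "Uncore" here stands for everything that is not a core
    (caches, memory controller, I/O). All figures are illustrative.
    """
    # Cores that fit in the area left over after the uncore.
    by_area = int((die_area_mm2 - uncore_area_mm2) // core_area_mm2)
    # Cores that can be powered with what is left after the uncore.
    by_power = int((power_budget_w - uncore_power_w) // core_power_w)
    # The tighter of the two budgets decides.
    return max(0, min(by_area, by_power))


# Hypothetical 200 mm2 die with a 105 W budget.
big_cores = max_cores(200, 10.0, 60, 105, 12.0, 15)   # few complex cores
small_cores = max_cores(200, 2.5, 60, 105, 3.0, 15)   # many simple cores

print("complex cores:", big_cores)
print("simple cores: ", small_cores)
```

With these made-up figures the power budget, not the area, is the limiting factor in both cases, and the simpler core design fits several times more cores into the same chip.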

Depending on how the CPU will be used, a given number of cores makes more or less sense. For example, if we set up a local data center shared by several users, it makes sense to allocate at least one core per user or per virtualized operating system.

Communication is also a limit to the cores in a CPU

Putting a large number of cores on a chip seems easy, but all of them need to be able to communicate with each other and with elements outside the processor.

First, each core needs its own first-level cache, and all of them share a last-level cache. Intermediate cache levels can be added, but the last level always matters because it lets the cores communicate without going out to memory. Without it, every exchange between one core and another would incur additional latency.

But a higher number of cores also means that the wiring and communication interfaces grow, and they grow faster than the core count itself if every core is wired directly to every other. This applies not only to the links between the cores within the processor but also to their links with the last-level cache, the peripherals and even the RAM.

As a CPU gains cores, the communication infrastructure inside the processor grows with it, occupying die area and consuming power.
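To get a feel for how quickly the wiring grows, here is a minimal sketch that counts the links needed to connect the cores under three layouts. The ring and the 2D mesh are not discussed in this article; they are included only as familiar examples of cheaper alternatives to wiring every core to every other one, and the function names are made up for this illustration.

```python
def full_links(n):
    """Every core wired directly to every other core."""
    return n * (n - 1) // 2


def ring_links(n):
    """Each core linked to its two neighbours in a closed loop."""
    return n if n > 2 else max(0, n - 1)


def mesh_links(rows, cols):
    """2D grid: links between horizontal and vertical neighbours."""
    return rows * (cols - 1) + cols * (rows - 1)


def ring_worst_hops(n):
    """Longest shortest path between two cores on a ring."""
    return n // 2


def mesh_worst_hops(rows, cols):
    """Longest shortest path on a grid (corner to opposite corner)."""
    return (rows - 1) + (cols - 1)


for side in (2, 4, 8):
    n = side * side
    print(f"{n:3d} cores: "
          f"full={full_links(n):5d} links, "
          f"ring={ring_links(n):3d} links ({ring_worst_hops(n)} hops max), "
          f"mesh={mesh_links(side, side):4d} links "
          f"({mesh_worst_hops(side, side)} hops max)")
```

Direct wiring grows with the square of the core count, while the cheaper layouts keep the link count close to the core count at the cost of more hops, and therefore more latency, between distant cores.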

Parallelization in software and its relationship with the core limit

Not every process running on a CPU can be parallelized, since there is a limit to how far a job can be subdivided into smaller tasks. Some processes contain parts that cannot be parallelized at all, and those parts gain nothing from running on several cores.

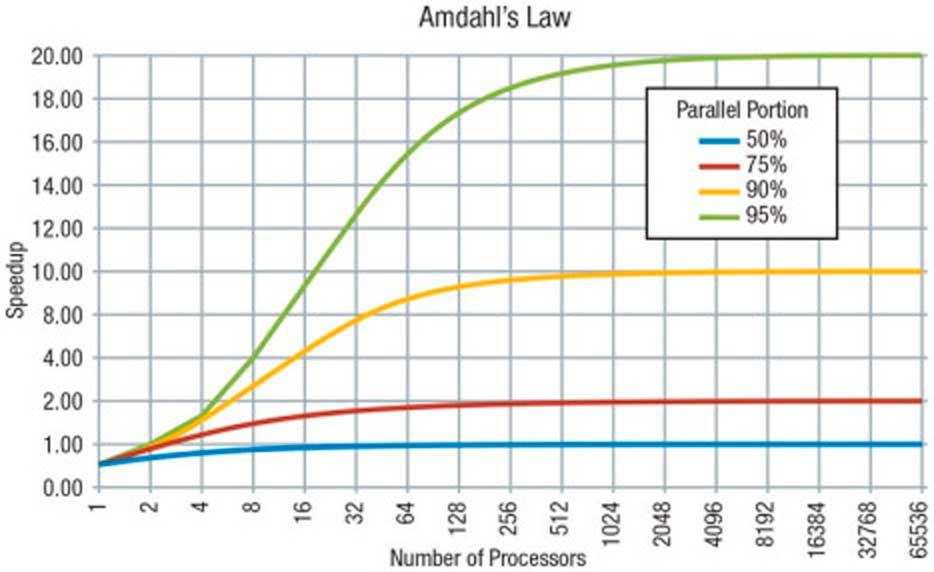

The paradox is that as we increase the number of cores, the parts of the code that can run in parallel and are optimized for multiple cores get faster and finish in less time. The serial part, however, gains nothing from the extra cores; to speed it up we need more powerful cores. This is what Amdahl’s Law describes.
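Amdahl’s Law can be written as speedup = 1 / ((1 - p) + p / n), where p is the fraction of the work that can run in parallel and n is the number of cores. The short sketch below evaluates it for a program assumed, purely for illustration, to be 90% parallelizable; the function name and the chosen core counts are not from any particular library.

```python
def amdahl_speedup(parallel_fraction, cores):
    """Speedup over a single core according to Amdahl's Law."""
    serial_fraction = 1.0 - parallel_fraction
    # The serial part takes the same time regardless of core count;
    # only the parallel part is divided among the cores.
    return 1.0 / (serial_fraction + parallel_fraction / cores)


# Assumed workload: 90% of the work can run in parallel.
p = 0.9
for cores in (1, 2, 4, 8, 16, 64, 1024):
    print(f"{cores:5d} cores -> {amdahl_speedup(p, cores):.2f}x")
```

However many cores are added, the speedup for this workload can never reach 10x, because the 10% serial part always takes the same time. That ceiling is 1 / (1 - p), which is why faster individual cores still matter.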

This is why the usual path is to improve existing architectures or create new ones with better single-core performance, since not every process that runs on a CPU gets faster with more cores. In some environments, however, the number of cores used in the system is directly related to how parallelizable the software is.

Accelerators or domain-specific processors

A domain-specific accelerator or processor is a piece of hardware that performs one specific task. This type of processor is based on three premises:

- It occupies a very small area compared to a CPU core or any other type of complex processor.

- It does its job with a minuscule fraction of the power of a CPU or other complex processor.

- It can work in parallel with the main processor it supports, taking over some of that processor's work and freeing up its time.

Normally, if we have to decompress a ZIP file or decode a JPEG image to display it on screen, we assign these tasks to a CPU core. With accelerators or domain-specific processors in place, the need to use CPU cores for this decreases, and configurations with a large number of cores become less necessary, since the accelerators offload from the CPU certain specific, repetitive tasks that it performs every day.

With all this, you now have the reasons why the number of cores has “stagnated”, although it obviously depends on how software uses the CPU, and that is what will make the core count of the processors in our PCs evolve. Do not forget that we have moved on from 4-core configurations and that 8-core ones will soon be completely standard, so the core limit will increase over time.

All of these are the reasons why CPU manufacturers do not put huge numbers of cores in the CPUs currently on the market. This does not mean that there is a hard limit on the number of cores in a processor, but rather that there are reasons why adding them happens slowly.