During his 2019 presentation about the future of the Intel Xe architecture, Raja Koduri mentioned a type of memory that Intel has christened “Rambo Cache” and that is one of the key pieces of Intel Xe. But what exactly is the Rambo Cache and what is it used for? We explain it below.

How do we make huge numbers of GPU chiplets communicate efficiently with each other? A memory is needed to handle that communication, and that’s where the Rambo Cache comes in.

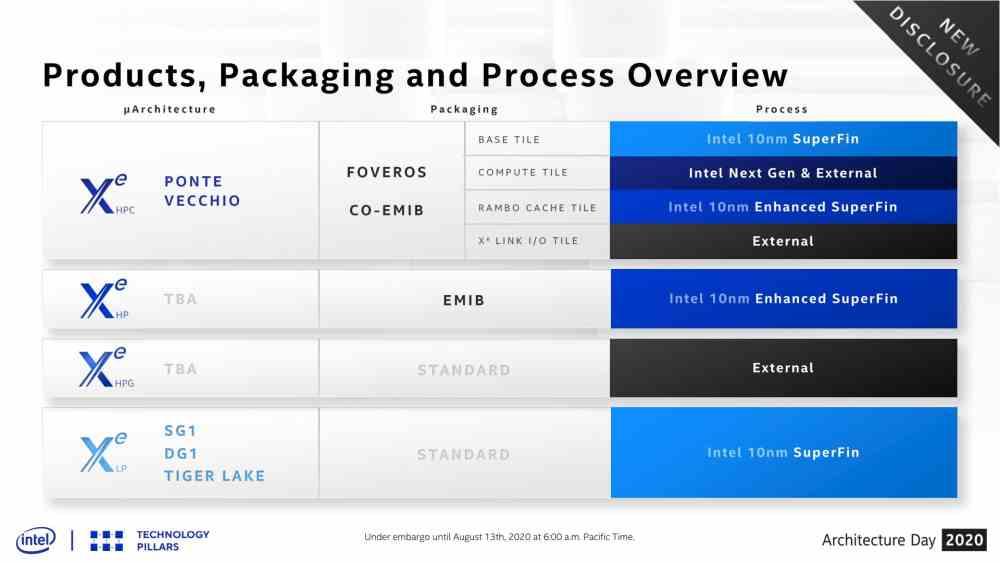

Rambo Cache as the difference between Xe-HP and Xe-HPC

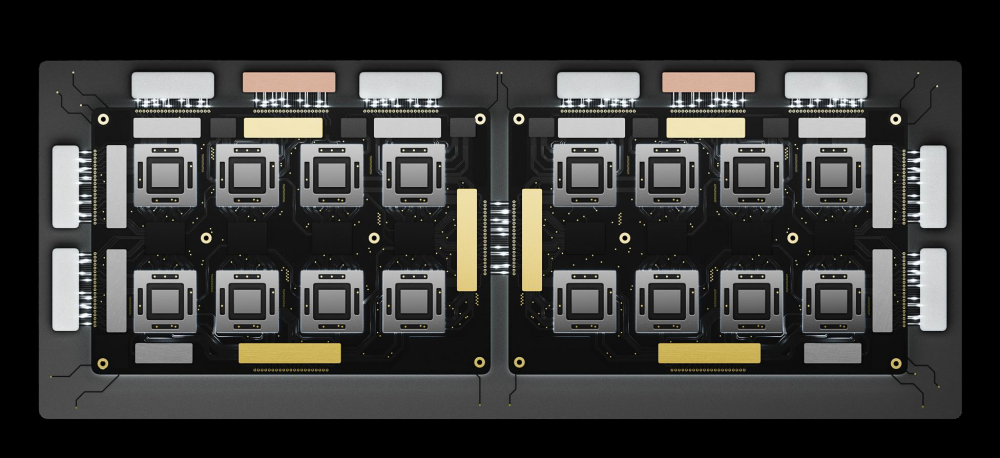

As can be seen in the Intel slide, the Rambo Cache is a separate chip containing memory, which will be used exclusively in the Intel Xe-HPC for communication between the different GPU chiplets, or tiles as Intel calls them. While the Intel Xe-HP supports up to 4 tiles, the Intel Xe-HPC handles a much larger amount of data, which makes this additional memory chip necessary as a communication bridge in extremely complex, data-heavy configurations.

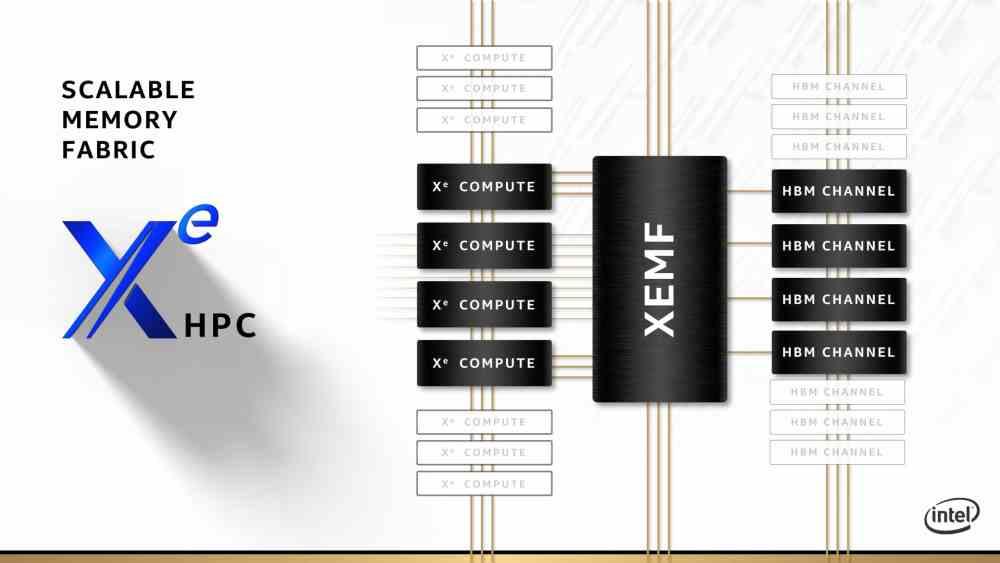

The Rambo Cache will be placed between multiple Intel Xe-HPC Compute Tiles to facilitate communication between them. Compute Tiles are nothing more than Intel Xe GPUs specialized for high-performance computing, so the classic fixed-function units of GPUs will not be present in the Intel Xe-HPC, because they are not used in high-performance computing.

However, the Rambo Cache will be absent from the rest of the Intel Xe family, especially the designs that are not based on several chips, such as the Intel Xe-LP and Intel Xe-HPG. In the specific case of the Intel Xe-HP, it seems that with 4 chiplets the Rambo Cache is not necessary, because the interposer provides enough bandwidth for communication between the chiplets mounted on top of it.

The goal is to reach the ExaFLOP

We know that the limit on the number of chiplets on an interposer is 4 GPUs. Beyond that number, the EMIB-based interconnection no longer provides enough bandwidth for communication, so an element that unifies access to memory becomes necessary. That is where the Rambo Cache would come in, since it would allow Intel to build a more complex GPU than the maximum of 4 chiplets that it can build with EMIB.

The objective? To create hardware that, combined, can reach 1 PetaFLOP of compute power, or in other words 1000 TFLOPS. That is far higher than the GPUs found in PCs, but this is not a GPU for PCs: it is hardware designed for supercomputers, with the aim of reaching the ExaFLOP milestone, which is 1000 PetaFLOPS and therefore 1 million TeraFLOPS.
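The unit arithmetic above can be checked with a few lines of Python. This is only a sketch using the standard SI prefixes; the names `TERA`, `PETA`, `EXA` and `convert` are ours, and the final figure assumes perfect scaling across devices, which no real system achieves.

```python
# Standard SI prefixes expressed as powers of ten (FLOPS = operations per second).
TERA = 10**12
PETA = 10**15
EXA = 10**18

def convert(value, from_unit, to_unit):
    """Convert a FLOPS figure from one SI-prefixed unit to another."""
    return value * from_unit // to_unit

petaflop_in_tflops = convert(1, PETA, TERA)  # 1 PetaFLOP expressed in TFLOPS
exaflop_in_pflops = convert(1, EXA, PETA)    # 1 ExaFLOP expressed in PetaFLOPS
exaflop_in_tflops = convert(1, EXA, TERA)    # 1 ExaFLOP expressed in TeraFLOPS

# Assumption for illustration only: ideal, loss-free scaling.
# How many 1-PetaFLOP devices would add up to 1 ExaFLOP?
devices_for_exaflop = EXA // PETA

print(petaflop_in_tflops, exaflop_in_pflops, exaflop_in_tflops, devices_for_exaflop)
```

In other words, under ideal scaling it would take on the order of a thousand 1-PetaFLOP devices to reach the ExaFLOP milestone.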

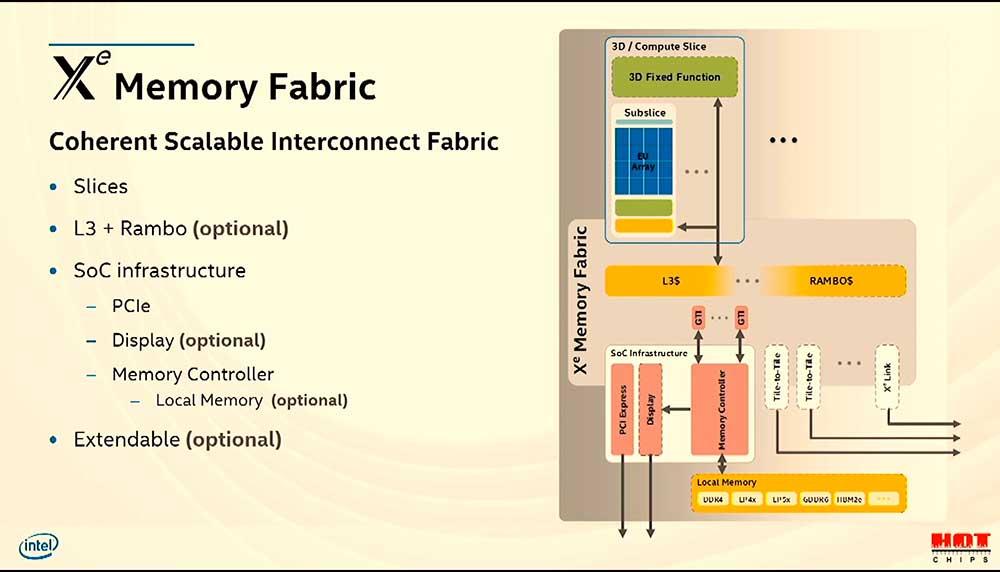

The great concern of hardware architects in achieving this is energy consumption, especially in data transfer: more calculations mean more data, and moving more data costs more energy. That is why it is important to keep the data as close to the processors as possible, and this is where the Rambo Cache comes in.

The Rambo Cache as a last-level cache

When we have several cores, whether in a CPU or a GPU, and we want all of them to access the same memory, both at the addressing level and at the physical level, a last-level cache is needed. Its “geographic” location within the GPU is just before the memory controller but after each core’s private caches.

GPUs today have at least two levels of cache. The first level is private to the shader units and is usually connected to the texture units. The second level, in contrast, is shared by all the elements of a GPU: it is their interconnection path for communicating and for accessing the most recent data, all in order to avoid saturating the VRAM controller with requests.

But there is an additional level: when several complete GPUs are interconnected under the same memory, an additional level of cache is needed that groups the accesses to all the memories. The Rambo Cache is Intel’s solution for unifying the memory accesses of all the GPUs that together make up Ponte Vecchio.
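To make the idea of a shared extra cache level more concrete, here is a toy Python model. It is not Intel’s design: the class names, the four tiles, the unlimited capacity and the absence of eviction or coherence traffic are all simplifying assumptions of ours. It only shows how a cache shared by several tiles can absorb requests that would otherwise reach memory.

```python
class Memory:
    """Backing memory; counts how many reads actually reach it."""
    def __init__(self):
        self.reads = 0

    def read(self, address):
        self.reads += 1
        return "memory"


class CacheLevel:
    """Toy cache: unlimited capacity, no eviction (illustrative assumption)."""
    def __init__(self, name, parent):
        self.name = name
        self.parent = parent   # next level to ask on a miss
        self.lines = set()     # addresses currently held

    def read(self, address):
        if address in self.lines:
            return self.name   # hit: served by this level
        source = self.parent.read(address)  # miss: forward the request
        self.lines.add(address)             # keep a copy for next time
        return source


memory = Memory()
# Hypothetical extra level shared by every tile, sitting in front of memory.
shared_cache = CacheLevel("shared last-level cache", memory)
# Hypothetical per-tile second-level caches, one for each of four tiles.
tile_l2 = [CacheLevel(f"tile {i} L2", shared_cache) for i in range(4)]

first = tile_l2[0].read(0x1000)   # misses everywhere, served by memory
second = tile_l2[1].read(0x1000)  # another tile: served by the shared cache
third = tile_l2[1].read(0x1000)   # same tile again: served by its own L2

print(first, "|", second, "|", third, "| memory reads:", memory.reads)
```

In this simplified model, three reads of the same address from two different tiles reach memory only once, because the second tile finds the data in the shared level.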