Have you ever wondered where the images you see on the screen of your PC, console or post-PC device are stored? The answer is image buffers, but what exactly are they and what do they consist of? How do they affect the performance of our PCs, what ways of rendering a scene exist, and how do they differ?

A GPU generates a huge amount of information every second, in the form of a stream of images that the television or monitor shows in quick succession. Both the size of these images and the amount of information stored and processed to produce them are enormous. Because image buffers are stored in VRAM, they are one of the reasons why memory with very high bandwidth is required.
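To put a number on "enormous", here is a rough back-of-the-envelope sketch. It assumes an uncompressed buffer with 4 bytes per pixel (8 bits each for red, green, blue and alpha) and ignores the padding, tiling and compression real GPUs use; `writes_per_pixel` is a hypothetical knob for how many times each pixel gets rewritten per frame.

```python
def framebuffer_bytes(width: int, height: int, bytes_per_pixel: int) -> int:
    """Size in bytes of one uncompressed image buffer."""
    return width * height * bytes_per_pixel


def write_bandwidth_gb_s(width, height, bytes_per_pixel, fps, writes_per_pixel=1.0):
    """GB/s (10^9 bytes per second) needed just to write every pixel
    `writes_per_pixel` times per frame at `fps` frames per second."""
    return framebuffer_bytes(width, height, bytes_per_pixel) * writes_per_pixel * fps / 1e9


# 4K (3840x2160) buffer with 4 bytes per pixel
print(framebuffer_bytes(3840, 2160, 4))                                      # 33177600 (about 31.6 MiB)
print(round(write_bandwidth_gb_s(3840, 2160, 4, 60), 2))                     # 1.99
print(round(write_bandwidth_gb_s(3840, 2160, 4, 60, writes_per_pixel=3), 2))  # 5.97
```

And that is only the final color buffer being written once per pixel; the display output also has to read it on every refresh, and the extra buffers discussed below multiply these figures.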

What is the image buffer?

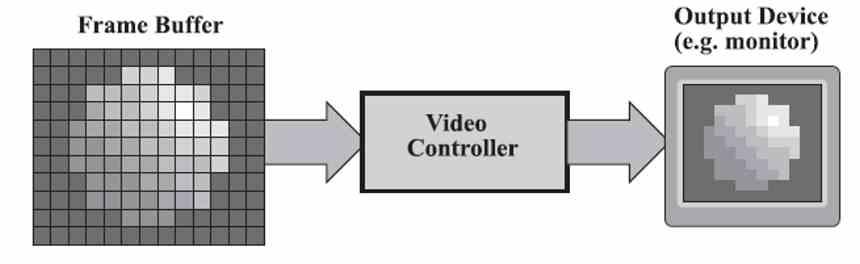

The image buffer is a region of VRAM that stores the information for each pixel of the next frame to be shown on screen. The GPU generates it anew every few milliseconds; at 60 frames per second, for example, a new frame is produced roughly every 16.7 ms.
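Conceptually, an image buffer is just a flat block of memory with one fixed-size entry per pixel. The sketch below uses a hypothetical `Framebuffer` class that assumes a simple row-major RGBA8 layout; real GPUs often store buffers in tiled or compressed layouts, so this is only the logical view.

```python
class Framebuffer:
    """Hypothetical row-major RGBA8 image buffer held in one flat bytearray."""

    BYTES_PER_PIXEL = 4  # R, G, B, A, one byte each

    def __init__(self, width: int, height: int):
        self.width = width
        self.height = height
        self.data = bytearray(width * height * self.BYTES_PER_PIXEL)

    def offset(self, x: int, y: int) -> int:
        # Rows are stored one after another, so pixel (x, y) starts at
        # (y * width + x) * bytes_per_pixel.
        return (y * self.width + x) * self.BYTES_PER_PIXEL

    def set_pixel(self, x: int, y: int, rgba) -> None:
        o = self.offset(x, y)
        self.data[o:o + self.BYTES_PER_PIXEL] = bytes(rgba)

    def get_pixel(self, x: int, y: int) -> tuple:
        o = self.offset(x, y)
        return tuple(self.data[o:o + self.BYTES_PER_PIXEL])


fb = Framebuffer(1920, 1080)
fb.set_pixel(10, 2, (255, 128, 0, 255))
print(fb.offset(10, 2))     # 15400
print(fb.get_pixel(10, 2))  # (255, 128, 0, 255)
```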

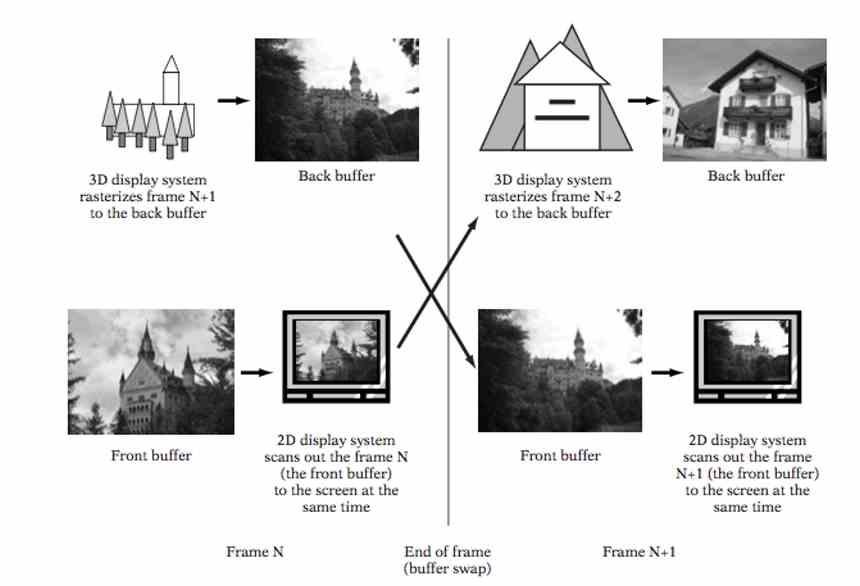

Current GPUs work with at least two image buffers: in the first, the GPU writes the next frame to be shown on screen, while the second holds the previous, already finished frame, which is the one being sent to the screen.

The buffer the GPU draws into is called the back buffer, and the one read by the display controller and sent to the video output is called the front buffer. While the front buffer stores the red, green and blue components of each pixel, the back buffer can also store a fourth component, alpha, which holds the pixel's transparency or semi-transparency value.
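The back/front arrangement can be modeled with a minimal, hypothetical `SwapChain` class (not a real graphics API). It assumes the GPU's work is replaced by filling the back buffer with a single color, and that presenting a frame simply swaps which buffer plays which role, so no pixel data has to be copied.

```python
class SwapChain:
    """Hypothetical double-buffering model: the GPU draws into `back`,
    the video output reads `front`, and present() swaps the two."""

    def __init__(self, width: int, height: int):
        n = width * height
        # Both buffers start cleared to opaque black; each pixel is (R, G, B, A).
        self.back = [(0, 0, 0, 255)] * n   # written by the GPU
        self.front = [(0, 0, 0, 255)] * n  # read for the video output

    def render(self, rgba) -> None:
        # Stand-in for a frame of GPU work: fill the back buffer with one color.
        for i in range(len(self.back)):
            self.back[i] = rgba

    def present(self) -> None:
        # Exchange references: the finished frame becomes the front buffer.
        self.front, self.back = self.back, self.front

    def scanout(self) -> list:
        # Only R, G and B are sent to the display; alpha is dropped.
        return [px[:3] for px in self.front]


chain = SwapChain(2, 2)
chain.render((255, 0, 0, 255))
print(chain.scanout()[0])  # (0, 0, 0): the new frame is not visible yet
chain.present()
print(chain.scanout()[0])  # (255, 0, 0)
```

Swapping references instead of copying is why double buffering is cheap: while the screen shows one buffer, the GPU is free to overwrite the other.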

What is the display controller?

The display controller is a piece of hardware in the GPU that reads the image buffer from VRAM and converts the data into a signal that the video output, whatever its type, can understand.

With variable refresh rate technologies such as NVIDIA G-SYNC, AMD FreeSync, VESA Adaptive-Sync and the like, the display controller not only sends data to the monitor or television but also controls when each frame begins and ends.
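The difference in timing can be sketched with a simplified model. It assumes that with a fixed refresh rate and v-sync a finished frame waits for the next refresh tick, while with variable refresh the display starts a refresh as soon as the frame is ready, limited only by the panel's maximum rate. The lower bound of the variable refresh range, and how each vendor handles frame rates below it, are deliberately ignored.

```python
import math


def fixed_refresh_present_times(done_ms, refresh_hz):
    """Fixed refresh with v-sync: each finished frame waits for the next refresh tick."""
    period = 1000.0 / refresh_hz
    return [math.ceil(t / period) * period for t in done_ms]


def vrr_present_times(done_ms, max_hz):
    """Variable refresh: a refresh starts as soon as the frame is ready,
    but never sooner than 1 / max_hz after the previous refresh."""
    min_gap = 1000.0 / max_hz
    shown = []
    last = -math.inf
    for t in done_ms:
        start = max(t, last + min_gap)
        shown.append(start)
        last = start
    return shown


done = [20.0, 45.0, 61.0]  # times (ms) at which the GPU finishes each frame
print([round(t, 2) for t in fixed_refresh_present_times(done, 60)])  # [33.33, 50.0, 66.67]
print([round(t, 2) for t in vrr_present_times(done, 144)])           # [20.0, 45.0, 61.0]
```

With a fixed 60 Hz refresh every frame waits up to a full refresh period before it can be shown; with variable refresh it appears as soon as it is done.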

Image Buffers and Traditional 3D Rendering

Although our screens are 2D, 3D scenes have been rendered on them in real time for more than twenty years. To do this, the back buffer has to be divided into two separate buffers:

- Color buffer: stores the color and alpha values of each pixel.

- Depth buffer: stores the depth value of each pixel.

When rendering a 3D scene, several pixels may occupy the same position on screen (the same X and Y coordinates) while having different values in the depth buffer. Only the closest one is visible to the viewer, so the extra pixels must be discarded. This is done by comparing their depth values and discarding those farthest from the camera.
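The comparison described above is the classic depth test. Here is a minimal sketch, assuming that a smaller depth value means closer to the camera and that each fragment arrives as a hypothetical `(x, y, depth, color)` tuple:

```python
import math


def rasterize_with_depth_test(width, height, fragments):
    """fragments: iterable of (x, y, depth, color); smaller depth = closer to the camera."""
    depth = [[math.inf] * width for _ in range(height)]  # depth buffer, cleared to "infinitely far"
    color = [[None] * width for _ in range(height)]      # color buffer
    for x, y, z, c in fragments:
        if z < depth[y][x]:
            # Closer than anything stored so far: keep it in both buffers.
            depth[y][x] = z
            color[y][x] = c
        # Otherwise the fragment is hidden behind what is already stored and is discarded.
    return color, depth


frags = [(0, 0, 5.0, "red"), (0, 0, 2.0, "blue"), (0, 0, 9.0, "green"), (1, 0, 3.0, "red")]
color, depth = rasterize_with_depth_test(2, 1, frags)
print(color)  # [['blue', 'red']]
print(depth)  # [[2.0, 3.0]]
```

The result does not depend on the order in which fragments arrive: at pixel (0, 0) the blue fragment wins because it is the closest, even though it was neither the first nor the last to be drawn.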

The depth buffer

The depth buffer, better known as the Z-buffer, stores the distance of each pixel of a 3D scene from the point of view or camera. It can be generated at one of two moments:

- After the rasterization stage and before the texturing stage.

- After the texturing stage.

Since the pixel/fragment shader is the most computationally expensive stage, generating the Z-buffer only after texturing the scene means computing the color, and therefore running the pixel or fragment shader, for hundreds of thousands or even millions of pixels that will never be visible.
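The cost difference can be made concrete by counting how many times the pixel shader would run. This is a simplified model, not how any particular GPU schedules work: `shader_invocations` is a hypothetical helper, fragments are `(pixel_id, depth)` pairs, and smaller depth means closer. With late depth testing every fragment is shaded; with early depth testing only fragments that pass the test are.

```python
def shader_invocations(fragments, early_z):
    """Count pixel-shader runs for fragments submitted in the given order."""
    nearest = {}  # pixel_id -> closest depth stored so far
    runs = 0
    for pixel, z in fragments:
        passes = z < nearest.get(pixel, float("inf"))
        if early_z and not passes:
            continue  # rejected before shading: no shader cost at all
        runs += 1     # the pixel/fragment shader runs for this fragment
        if passes:
            nearest[pixel] = z
    return runs


pixels = range(1000)
front_to_back = [(p, z) for z in (1.0, 2.0, 3.0) for p in pixels]  # three layers
back_to_front = [(p, z) for z in (3.0, 2.0, 1.0) for p in pixels]
print(shader_invocations(front_to_back, early_z=False))  # 3000
print(shader_invocations(front_to_back, early_z=True))   # 1000
print(shader_invocations(back_to_front, early_z=True))   # 3000
```

The last line also shows that testing depth early only pays off when nearby surfaces reach the depth buffer first, which is why engines often sort opaque objects front to back or render a depth-only pre-pass.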

The drawback of generating it before texturing is that the color and transparency (alpha channel) values are not yet available, so a pixel that sits behind a transparent one can be discarded beforehand and end up missing from the final scene.

To avoid this, developers usually render the scene in two passes: the first renders the opaque geometry and leaves out transparent or semi-transparent objects, while the second renders only those objects on top of the result.
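A common way to organize those two passes is sketched below for a single pixel. It assumes standard "over" alpha blending, opaque surfaces resolved with a normal depth test in the first pass, and transparent surfaces sorted back to front in the second pass, tested against the opaque depth but not written into the depth buffer. The `(depth, rgb, alpha)` tuple format is hypothetical.

```python
def blend(src_rgb, src_alpha, dst_rgb):
    """'Over' blending: result = src * alpha + dst * (1 - alpha)."""
    return tuple(s * src_alpha + d * (1 - src_alpha) for s, d in zip(src_rgb, dst_rgb))


def render_pixel(opaque, transparent, clear=(0.0, 0.0, 0.0)):
    """opaque / transparent: lists of (depth, rgb, alpha) covering one pixel."""
    # Pass 1: opaque geometry, with depth test and depth write.
    depth, rgb = float("inf"), clear
    for z, c, _ in opaque:
        if z < depth:
            depth, rgb = z, c
    # Pass 2: transparent geometry sorted back to front, tested against the
    # opaque depth but without writing depth, so nothing behind glass is lost.
    for z, c, a in sorted(transparent, key=lambda f: f[0], reverse=True):
        if z < depth:
            rgb = blend(c, a, rgb)
    return rgb


red_wall = [(10.0, (1.0, 0.0, 0.0), 1.0)]
glass = [(5.0, (0.0, 0.0, 1.0), 0.5), (12.0, (0.0, 1.0, 0.0), 0.5)]
print(render_pixel(red_wall, glass))  # (0.5, 0.0, 0.5)
```

The blue glass in front of the wall tints it, while the green glass behind the wall is correctly rejected by the depth test.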

Image buffers and deferred rendering

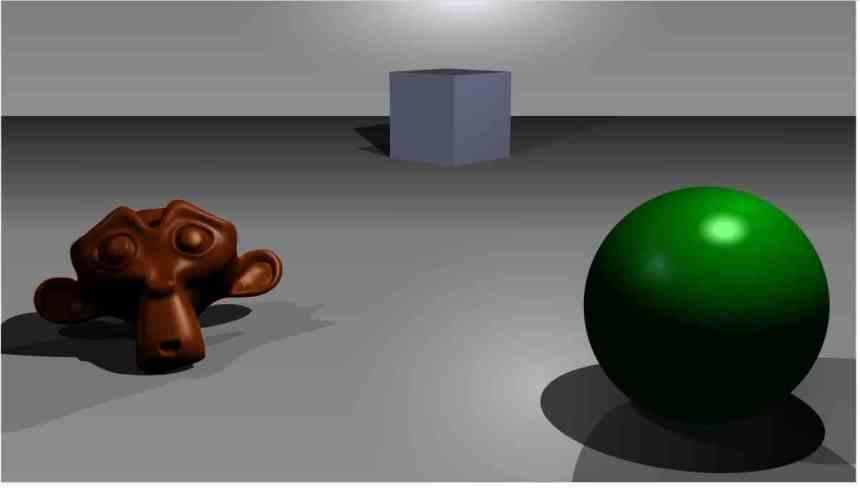

One of the rendering novelties of the late 2000s was deferred rendering, which consists of first rendering the scene into a series of additional buffers, collectively called the G-Buffer, and then calculating the scene's lighting in a separate step.
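The two steps can be sketched as follows. This is a deliberately simplified model under stated assumptions: the G-Buffer holds only a hypothetical set of attributes (albedo, normal, position), the lighting is plain Lambert diffuse from point lights without distance attenuation, and fragments are hypothetical `(x, y, depth, texel)` tuples.

```python
from dataclasses import dataclass


@dataclass
class GBufferTexel:
    albedo: tuple    # surface base color (r, g, b)
    normal: tuple    # unit surface normal (x, y, z)
    position: tuple  # world-space position (x, y, z)


def geometry_pass(fragments, width, height):
    """Step 1: depth-test the fragments and store surface attributes, no lighting yet."""
    gbuf = [None] * (width * height)
    depth = [float("inf")] * (width * height)
    for x, y, z, texel in fragments:
        i = y * width + x
        if z < depth[i]:
            depth[i] = z
            gbuf[i] = texel
    return gbuf


def lighting_pass(gbuf, lights):
    """Step 2: light every covered pixel once per light, using only G-Buffer data."""
    out = []
    for t in gbuf:
        if t is None:  # nothing was drawn here
            out.append((0.0, 0.0, 0.0))
            continue
        total = 0.0
        for light_pos, intensity in lights:
            to_light = [l - p for l, p in zip(light_pos, t.position)]
            dist = sum(c * c for c in to_light) ** 0.5
            if dist == 0.0:
                continue
            n_dot_l = sum(n * c / dist for n, c in zip(t.normal, to_light))
            total += intensity * max(n_dot_l, 0.0)  # Lambert diffuse term
        out.append(tuple(a * total for a in t.albedo))
    return out


texel = GBufferTexel((1.0, 1.0, 1.0), (0.0, 0.0, 1.0), (0.0, 0.0, 0.0))
gbuf = geometry_pass([(0, 0, 1.0, texel)], 2, 1)
print(lighting_pass(gbuf, [((0.0, 0.0, 2.0), 1.0)]))  # [(1.0, 1.0, 1.0), (0.0, 0.0, 0.0)]
```

The key point is that `lighting_pass` never looks at the scene's geometry again: everything it needs was left in the G-Buffer by the first step.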

Needing several image buffers multiplies the memory requirements: more VRAM is needed to store them, and a higher fill rate, and therefore more bandwidth, is needed to write and read them.
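How quickly this adds up can be estimated with a short calculation. The layout below is hypothetical and only for illustration (albedo in RGBA8, normals in RGBA16F, material parameters in RGBA8 and a 32-bit depth/stencil buffer); real engines choose their own formats and number of targets.

```python
def gbuffer_bytes(width, height, target_bytes_per_pixel):
    """Total VRAM for a set of full-screen render targets, uncompressed."""
    return width * height * sum(target_bytes_per_pixel)


# Hypothetical G-Buffer layout: bytes per pixel for each render target
layout = {"albedo": 4, "normal": 8, "material": 4, "depth_stencil": 4}

print(gbuffer_bytes(1920, 1080, layout.values()))  # 41472000 bytes (about 39.6 MiB)
print(gbuffer_bytes(1920, 1080, [4]))              # 8294400 bytes for a single RGBA8 color buffer
```

At 1080p this hypothetical G-Buffer is five times larger than a single color buffer, and every byte of it has to be written in the first step and read again when the lighting is calculated, every frame.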

Deferred rendering was nevertheless introduced to fix one of the performance problems of classic (forward) rasterization, in which every change to a pixel's value, whether in luminance or chrominance, means rewriting it in the image buffer. This translates into a huge amount of data moving through VRAM.

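Thanks to deferred rendering, the cost of each additional light no longer scales with the amount of geometry in the scene (every object shaded once per light) but essentially with the screen resolution (every visible pixel lit once per light), which reduces the computational cost of rendering scenes with many light sources.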
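A crude cost model illustrates the trade-off. It assumes a multi-pass forward renderer that shades every fragment of every object once per light, and a deferred renderer that writes each fragment to the G-Buffer once and then lights each screen pixel once per light, with no light culling in either case. The object count and overdraw figures are hypothetical.

```python
def forward_cost(fragments_per_object, num_lights):
    """Multi-pass forward shading: every fragment of every object is shaded once per light."""
    return sum(fragments_per_object) * num_lights


def deferred_cost(fragments_per_object, screen_pixels, num_lights):
    """Deferred: one G-Buffer write per fragment, then one lighting evaluation
    per screen pixel per light."""
    return sum(fragments_per_object) + screen_pixels * num_lights


screen = 1920 * 1080          # 2,073,600 pixels
objects = [62_208] * 100      # hypothetical: 100 objects, total overdraw of 3
for lights in (1, 10, 50):
    print(lights, forward_cost(objects, lights), deferred_cost(objects, screen, lights))
# 1 6220800 8294400
# 10 62208000 26956800
# 50 311040000 109900800
```

With a single light the deferred approach is actually more expensive in this model, but as lights are added its cost grows with the number of screen pixels rather than with all the fragments produced by overlapping geometry.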

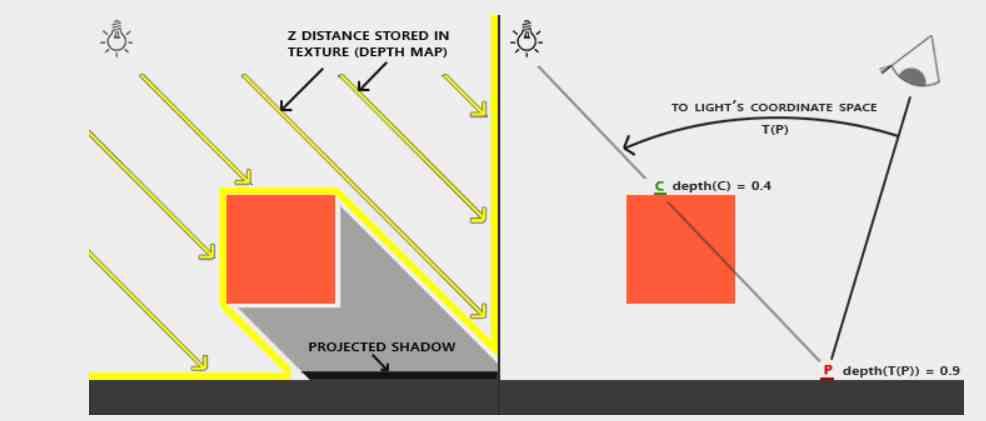

Image buffers as shadow maps

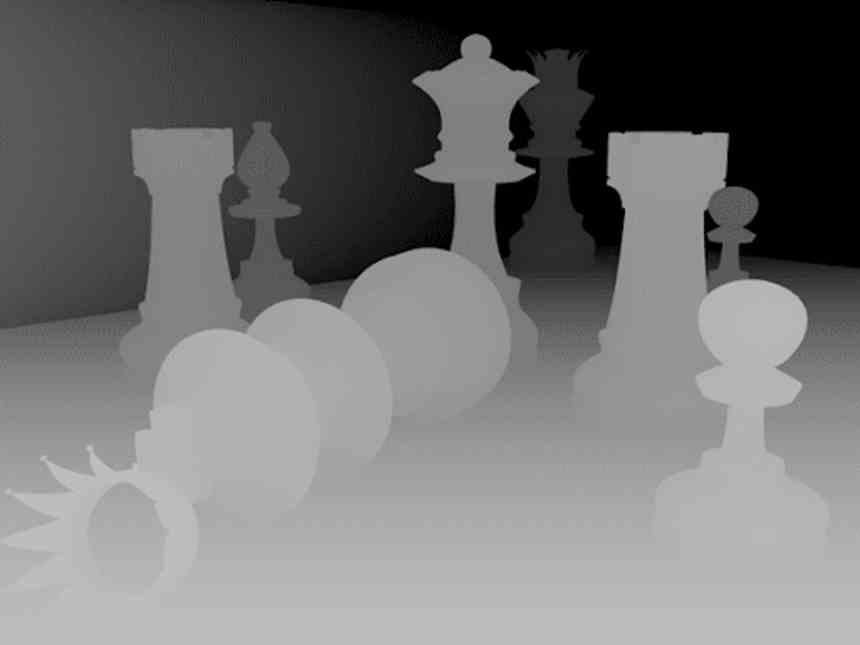

Because rasterization on its own cannot tell which points of the scene a light source actually reaches, tricks are needed to generate shadows, and shadow maps are the most common one.

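The simplest method is to render the scene from the light source as if it were the camera, but instead of producing a complete image, only the depth buffer is generated. That depth buffer is then used as a shadow map: when the scene is rendered from the real camera, a point is in shadow if the shadow map records something closer to the light at that position.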
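Here is a minimal sketch of both halves of the technique. It assumes points have already been projected into the light's view as hypothetical `(u, v, depth_from_light)` samples with integer texel coordinates, so the projection math and filtering are left out. The small `bias` is a common trick against "shadow acne", where a surface wrongly shadows itself because of limited depth precision.

```python
def build_shadow_map(occluders, size):
    """Render from the light: keep only the nearest depth per shadow-map texel.
    occluders: list of (u, v, depth_from_light) samples."""
    shadow_map = [[float("inf")] * size for _ in range(size)]
    for u, v, d in occluders:
        if d < shadow_map[v][u]:
            shadow_map[v][u] = d
    return shadow_map


def in_shadow(shadow_map, u, v, depth_from_light, bias=0.005):
    """A point is shadowed if something closer to the light was stored at its texel."""
    return depth_from_light - bias > shadow_map[v][u]


sm = build_shadow_map([(0, 0, 3.0), (0, 0, 5.0)], size=2)
print(in_shadow(sm, 0, 0, 5.0))  # True: an occluder at depth 3.0 blocks the light
print(in_shadow(sm, 0, 0, 3.0))  # False: this is the occluder itself
print(in_shadow(sm, 1, 1, 4.0))  # False: nothing between the point and the light
```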

This technique may disappear in the coming years with the rise of ray tracing, which can create much more precise shadows in real time without needing to generate a large shadow map in VRAM.