Domain Specific Accelerators, or DSAs, are a type of unit that sits below a CPU in complexity but can perform certain tasks faster and with lower power consumption. But how do they work, what defines them, and why are they the future of hardware?

As time goes by, programs need to run faster and faster, but CPUs and GPUs have become behemoths of complexity whose performance is very difficult to increase by traditional means. The proposed solution to this problem? Domain Specific Accelerators.

First of all, the accelerator concept

Since the dawn of computing, support chips have been necessary to speed up certain techniques. Originally, these chips freed the CPU from performing a repetitive, recurring task. The clearest example is the graphics system, which spared the CPU from spending most of its time drawing on the screen.

An accelerator is a support chip that goes further: it not only frees the CPU from that task but also speeds it up. That is, the task completes in a fraction of the time the CPU would take, which in turn makes everything else run faster. Hence the name accelerator.
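How much faster "everything" gets depends on how much of the total run time the accelerated task represents. The following is a minimal sketch using Amdahl's law; the function name and the example figures (a task that takes 60% of the run time and is accelerated 10x) are assumptions chosen for illustration, not measurements.

```python
def overall_speedup(accelerated_fraction: float, task_speedup: float) -> float:
    """Amdahl's law: whole-program speedup when only part of the run time is accelerated."""
    if not 0.0 <= accelerated_fraction <= 1.0:
        raise ValueError("accelerated_fraction must be between 0 and 1")
    if task_speedup <= 0:
        raise ValueError("task_speedup must be positive")
    remaining = 1.0 - accelerated_fraction             # share still executed by the CPU
    accelerated = accelerated_fraction / task_speedup  # share handled by the accelerator
    return 1.0 / (remaining + accelerated)


if __name__ == "__main__":
    # Assumed example: 60% of the run time is offloaded and runs 10x faster.
    print(round(overall_speedup(0.6, 10.0), 2))
```

Under these assumed numbers the whole program runs a little more than twice as fast, even though the offloaded task itself runs ten times faster. The part left on the CPU sets the ceiling.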

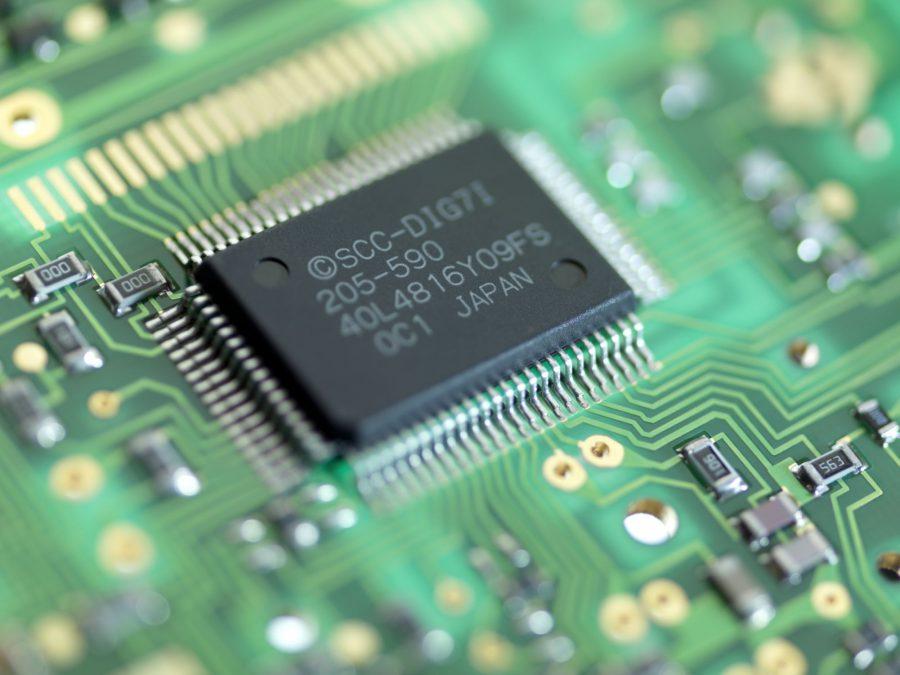

There are many types and designs of accelerators, and any type of hardware can be one: a microcontroller, an FPGA, a combinational or sequential circuit, etc. In recent years, a type of accelerator has appeared that will dominate hardware in the coming years: the Domain Specific Accelerator.

Domain Specific Accelerators, general definition

In hardware, we have long used accelerators for different types of jobs and specific applications, and therefore for a particular domain. Today these domains include graphics, Deep Learning, bioinformatics, and real-time voice and image processing. There are many specific domains in which a Domain Specific Accelerator can solve the problem better than a CPU, that is, in less time and with less power.

The first question that comes to mind is: is a GPU a Domain Specific Accelerator? No, it is not. DSAs handle very specific tasks, so a GPU will contain several of these units. To make this clearer, keep in mind that every task can be divided into several smaller ones, each of which can be accelerated independently by this type of processor.

However, Domain Specific Accelerators differ from the other options on the market, since their design exploits a series of characteristics that place them between general purpose processors and conventional accelerators. In other words, they do not reach the complexity of a CPU, but they are much more complex than the classic solutions, especially those based on a fixed function.

Specific domain, specific ISA

The first thing to bear in mind is that a Domain Specific Accelerator is not a CPU. Although it also executes a program, its design is optimized for a specific solution rather than a general one. To that end, an entirely exclusive ISA is created around the DSA unit, whose instructions, registers and data types are designed to complete quickly certain operations that a CPU would take many cycles to perform.

Today's CPUs build the instructions in their ISAs from microinstructions that share a common data path through the instruction cycle. Because of the complexity of the instruction set, a complex instruction therefore takes many cycles to complete. In a DSA we can create dedicated instruction loops and data paths for certain instructions so that they run faster. We can even create parallel units that execute just that instruction over and over.

But the biggest advantage is that, for certain applications, we can get rid of the instructions of a general purpose unit that are useless for our specific application. In recent years, those instructions have ended up turning the register and instruction sets of CPUs and GPUs into mastodons that occupy a large amount of space.
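As a rough illustration of the difference a specialized instruction can make, here is a toy model that counts executed instructions for a dot product. Both "machines" are hypothetical: the general-purpose one issues a separate instruction for each load, multiply, add and loop step, while the DSA has an assumed fused multiply-accumulate (MAC) instruction with auto-incrementing addresses. The counts come from this toy model only; real cycle counts depend on the microarchitecture.

```python
def dot_general_purpose(a, b):
    """Dot product on a toy general-purpose core: one simple step per instruction."""
    acc, executed = 0, 0
    for i in range(len(a)):
        x = a[i]
        executed += 1        # LOAD a[i]
        y = b[i]
        executed += 1        # LOAD b[i]
        p = x * y
        executed += 1        # MUL
        acc = acc + p
        executed += 1        # ADD
        executed += 2        # index increment + loop branch
    return acc, executed


def dot_dsa(a, b):
    """Same job on a toy DSA with a hypothetical fused MAC instruction."""
    acc, executed = 0, 0
    for x, y in zip(a, b):
        acc += x * y         # load both operands, multiply and accumulate at once
        executed += 1        # a single MAC instruction per element
    return acc, executed


if __name__ == "__main__":
    a, b = [1, 2, 3, 4], [5, 6, 7, 8]
    print(dot_general_purpose(a, b))
    print(dot_dsa(a, b))
```

Both functions return the same result; only the number of instructions needed to reach it differs, which is the effect the dedicated data path is meant to achieve.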

Domain Specific Accelerators and memory access

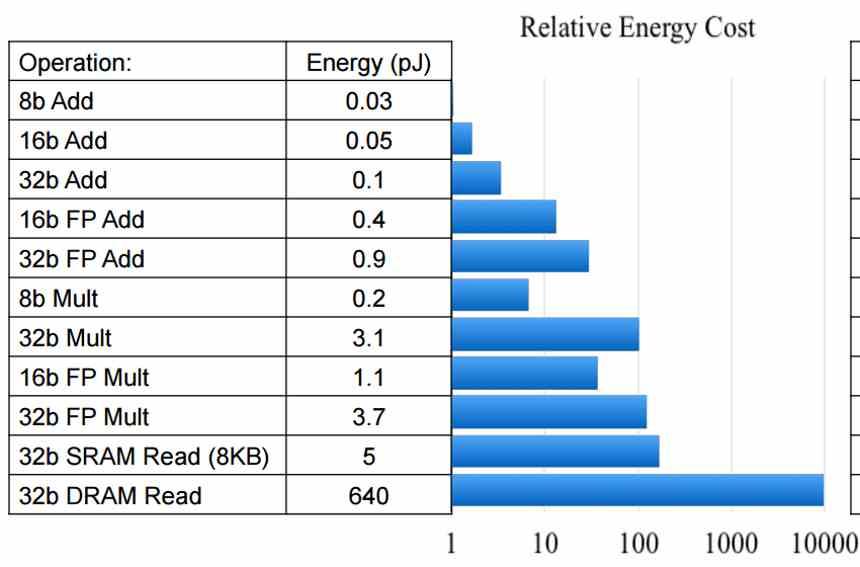

Another improvement of the DSA has to do with memory: like a microcontroller, it uses memory located within the accelerator itself. This is important, since the physical distance to the memory influences the energy cost of the instructions.

This memory configuration is the main advantage of accelerators, since each executed instruction consumes much less power than on a CPU, and it also avoids the problem of memory contention. A DSA does not use the system RAM to perform its calculations, so it can work in parallel all the time.
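To give a feel for why memory placement matters, the sketch below compares the energy of the same number of accesses to on-chip memory and to system RAM. The two per-access energy constants are assumed placeholder values that only illustrate an order-of-magnitude gap; they are not figures for any real chip, and the function name is likewise hypothetical.

```python
# Assumed, illustrative energy costs per 32-bit access, in picojoules.
# Placeholders to show the scale of the gap, not measured values.
ON_CHIP_SRAM_PJ = 5.0
OFF_CHIP_DRAM_PJ = 640.0


def memory_energy_uj(accesses: int, pj_per_access: float) -> float:
    """Total energy in microjoules for a given number of memory accesses."""
    return accesses * pj_per_access / 1_000_000


if __name__ == "__main__":
    n = 1_000_000  # same workload in both cases
    local = memory_energy_uj(n, ON_CHIP_SRAM_PJ)
    remote = memory_energy_uj(n, OFF_CHIP_DRAM_PJ)
    print(f"on-chip: {local} uJ, system RAM: {remote} uJ, ratio: {remote / local:.0f}x")
```

With these assumed costs, keeping the working data inside the accelerator makes the memory traffic over a hundred times cheaper in energy for the same number of accesses.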

In addition, because of the way they work, we can place them in an SoC or a similar structure and have the CPU communicate with them directly, all without having to go through RAM to get the data.

Hardware and Software go hand in hand in DSAs

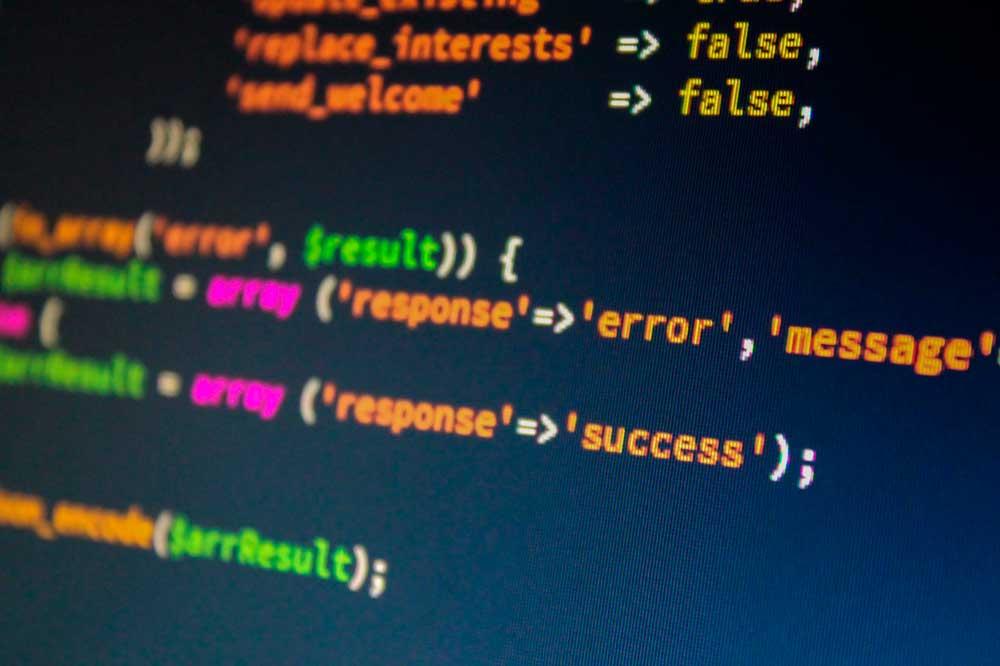

Hardware is not normally designed for specific software; rather, the software is adapted to take advantage of the hardware. This is done through specialized APIs at the software level: the software interacts with an abstraction of the hardware, and a program called the driver performs the translation between that abstraction and the hardware.
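The layering described above can be sketched in a few lines. Everything here is hypothetical: `ToyDevice`, `ToyDriver`, the `process_buffer` call and the register map are invented for illustration and do not correspond to any real API or chip.

```python
class ToyDevice:
    """Stand-in for the hardware: it only understands (register, value) writes."""

    def __init__(self):
        self.writes = []

    def write_register(self, register: int, value: int) -> None:
        self.writes.append((register, value))


class ToyDriver:
    """Translates an abstract API call into the register writes the device expects."""

    # Hypothetical register map, invented for this sketch.
    REG_SRC, REG_LEN, REG_START = 0x00, 0x04, 0x08

    def __init__(self, device: ToyDevice):
        self.device = device

    def process_buffer(self, address: int, length: int) -> None:
        self.device.write_register(self.REG_SRC, address)  # where the data lives
        self.device.write_register(self.REG_LEN, length)   # how much data to process
        self.device.write_register(self.REG_START, 1)      # kick off the job


if __name__ == "__main__":
    device = ToyDevice()
    ToyDriver(device).process_buffer(address=0x1000, length=256)
    print(device.writes)
```

The application only ever calls `process_buffer`; if the hardware changes, only the driver's translation has to change with it.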

In Domain Specific Accelerators, the idea is that they execute a program as if they were a CPU, but with an instruction set specialized for a specific problem, so that programs run faster on a DSA than on a CPU thanks to its specialized ISA and architecture.

Many future hardware designs will be DSAs for specialized problems, created locally in each company and institution to accelerate specific parts of one or more programs they have developed. They will be implemented as custom chips, as blocks within SoCs, and even on FPGAs through languages such as Verilog or VHDL.

This completely reverses the relationship between hardware and software: we go from designing software to take advantage of specific hardware to designing hardware for specific software solutions.