One of the most technical and ambiguous specifications you will come across when buying a monitor is color depth. 8-bit is standard today, 10-bit is becoming more popular and 12-bit sits at the high end of the market, but what is color depth, and how does it influence image quality? In this article we will try to clear up your doubts.

Color depth has always been important, but with the rise of 4K resolution and HDR, the ability to display gradations and shades of color accurately has become even more essential. As you might expect, the higher the bit depth, the better the image quality, but we will explain it in detail so you can understand why.

What is color depth on a monitor?

Earlier we mentioned that, with the rise of 4K resolution and HDR, this parameter has become more important. It already mattered when 1080p was dominant, but it carries more weight as images become denser (more pixels as resolution increases) and carry more metadata. A screen's color depth describes how much color information each pixel can represent, and therefore how many distinct shades the panel can accurately display.

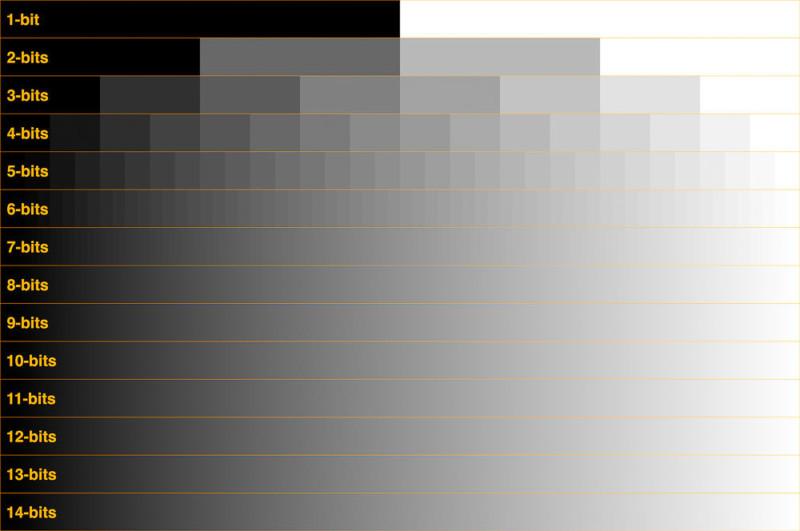

We just mentioned metadata, which generally refers to additional information beyond image basics such as resolution and frame rate. HDR information is carried in this metadata, and the more information a panel can display, the better and more accurate the image will be. You can see this clearly in the image above, which shows a gradient from black to white rendered at bit depths from 1 to 14 bits.

Bit depth and its effect on color rendering hold particular appeal for enthusiasts. Gamers, movie and TV buffs, photographers and video professionals value color fidelity highly and know that every detail counts, so this parameter is especially important to them: it indicates precisely how faithfully the screen represents color.

The math of bit depth

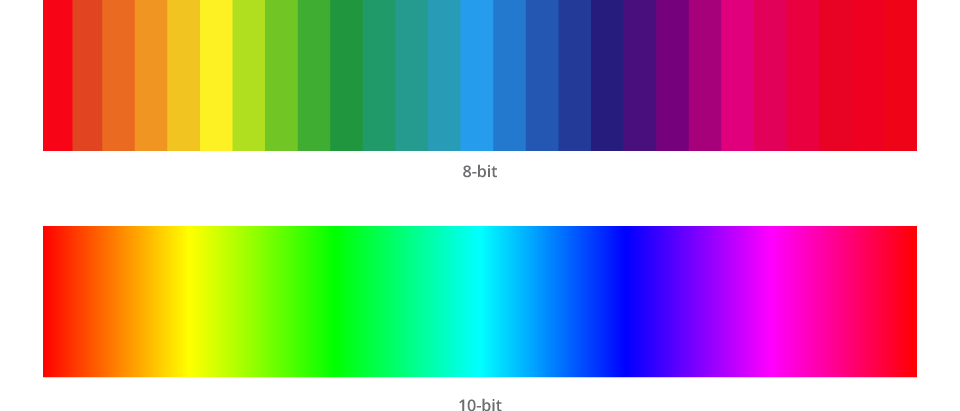

Working out color bit depth gets mathematical very quickly, but we will spare you the tedious calculations. Each pixel on a modern display panel is made up of subpixels for the three primary colors (red, green and blue, RGB), and the bit depth is the number of bits used to encode the intensity of each one. Since each bit can be either 0 or 1, n bits give 2^n possible values. An 8-bit panel therefore has 2^8 = 256 gradations of each of red, green and blue, which combine as 256 x 256 x 256 for a total of about 16.7 million possible colors.

A 10-bit panel can display up to 1,024 levels of each primary color per pixel, or 1,024 x 1,024 x 1,024 (about 1.07 billion) possible colors. That is four times as many levels per channel as an 8-bit panel and 64 times as many total colors, so it can render images with far greater color precision. A 12-bit panel goes further, with 4,096 levels of each primary color per pixel, or 4,096 x 4,096 x 4,096 for a total of about 68.7 billion possible colors.
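The arithmetic above can be reproduced in a few lines. This is a minimal sketch that assumes three independent RGB channels with the same number of bits each; the function name `color_counts` is our own, hypothetical choice. It shows how the levels per channel and the total number of colors both follow from the bit depth alone.

```python
def color_counts(bits_per_channel: int) -> tuple[int, int]:
    """Return (levels per primary color, total RGB colors) for a given bit depth.

    Assumes red, green and blue each use the same number of bits (hypothetical helper).
    """
    levels = 2 ** bits_per_channel  # each extra bit doubles the shades per channel
    total = levels ** 3             # every red level can pair with every green and blue level
    return levels, total


for bits in (8, 10, 12):
    levels, total = color_counts(bits)
    print(f"{bits}-bit: {levels} levels per channel, {total:,} colors")
```

Because the total is the per-channel count cubed, every extra bit per channel multiplies the number of colors by eight, which is why the jump from 8 to 10 bits is a factor of 64.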

8-bit, 10-bit, 12-bit: what is the real difference?

The difference is actually quite big. While 8-bit panels do a good job of displaying realistic images, they are also considered the bare minimum for modern input sources. The vast majority of 4K and 8K content is created at a depth of 10 bits or more, which means an 8-bit panel cannot display that content as its creators intended. An 8-bit panel receiving 10-bit or higher content has to “squash” the details and color gradations to fit.
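To get a feel for what this “squashing” means, here is a minimal sketch that maps 10-bit codes (0 to 1023) onto the 8-bit range (0 to 255). It assumes a simple linear rescale with round-half-up; the function `squash_10_to_8` is hypothetical, and real displays and video pipelines may truncate, dither or tone map instead. What it illustrates is that roughly four neighboring 10-bit shades end up on the same 8-bit value.

```python
def squash_10_to_8(value_10bit: int) -> int:
    """Map a 10-bit code (0-1023) to the nearest 8-bit code (0-255).

    Hypothetical helper assuming a plain linear rescale with round-half-up.
    """
    if not 0 <= value_10bit <= 1023:
        raise ValueError("10-bit values must be in the range 0-1023")
    # Integer arithmetic for value * 255 / 1023, rounded to the nearest level
    return (value_10bit * 255 + 511) // 1023


# Eight distinct 10-bit shades collapse into just two 8-bit shades
print([squash_10_to_8(v) for v in range(512, 520)])  # [128, 128, 128, 128, 129, 129, 129, 129]
```

Run over all 1,024 input codes, the function produces only 256 distinct outputs: the in-between shades are simply lost.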

For casual users the difference may seem acceptable, but if you really care about the content you are viewing, it is noticeable. An 8-bit panel has far fewer shades than a 10-bit one and cannot display the same rich variety of colors, which results in a duller, less vivid image overall. The shortfall usually shows up in very dark and very bright areas: for example, a bright light source may appear on an 8-bit screen as a bright spot surrounded by clearly visible bands of light, while a 10-bit panel shows it fading out gradually with no obvious banding.
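Banding can be estimated in the same spirit. This sketch quantizes a smooth horizontal gradient across a 3,840-pixel-wide (4K) screen and counts how many distinct levels survive. The scenario (a dark ramp covering the bottom 10% of the signal range) and the function `band_stats` are illustrative assumptions, and the model works on raw code values, ignoring gamma and the panel's real response. With 8 bits the ramp collapses into fewer than 30 steps, each more than 100 pixels wide and easy to see as bands, while 10 bits gives roughly four times as many, much narrower steps.

```python
def band_stats(bits: int, start: float, end: float, width_px: int) -> tuple[int, float]:
    """Return (distinct levels, average band width in pixels) for a horizontal ramp.

    start/end are fractions of the full signal range (0.0 = black, 1.0 = white).
    Hypothetical helper: linear code values only, no gamma or panel response.
    """
    max_code = 2 ** bits - 1
    # Ideal (continuous) value of each pixel column, quantized to the nearest code
    codes = {
        round((start + (end - start) * x / (width_px - 1)) * max_code)
        for x in range(width_px)
    }
    levels = len(codes)
    return levels, width_px / levels


# Hypothetical scenario: a dark ramp from 0% to 10% of the signal range on a 4K-wide screen
for bits in (8, 10):
    levels, band_px = band_stats(bits, 0.0, 0.10, 3840)
    print(f"{bits}-bit: {levels} distinct levels, bands about {band_px:.0f} px wide")
```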

A quick historical perspective helps here: 8-bit color depth dates back decades, to the era of VGA displays, and is tied to the standard RGB (sRGB) color gamut. As such, 8-bit monitors are poorly suited to wider color spaces like Adobe RGB or DCI-P3, and they cannot display HDR content properly (HDR10, for example, requires at least 10 bits).

Is more color depth better for gaming?

It certainly is better, although not strictly necessary. As we just said, 8-bit color belongs to the 1980s and VGA screens, an era when 4K resolution and HDR were not even in the dreams of the engineers developing the technology. Now, in the era of 4K and HDR content, a 10-bit display brings substantial benefits to the modern viewing experience.

Contemporary PC and console games are rendered at a minimum of 10 bits, and HDR is becoming more and more universal. These games will of course work well on a low-cost 8-bit panel, but you will miss a lot of detail, as discussed in the previous section. Even the most expensive 8-bit monitors and TVs that support HDR have limitations: on the Xbox One X, for example, a dithered 8-bit screen (simulating 10-bit as best it can) can only work with basic HDR10, while more capable screens unlock the Dolby Vision and HDR10+ options.
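How does an 8-bit screen “simulate” 10-bit? A common approach is temporal dithering, often listed in monitor specifications as 8-bit + FRC (frame rate control): the panel alternates between the two nearest 8-bit levels over several frames so that, on average, the eye perceives an in-between shade. We are assuming this is the kind of simulation meant here; the function `frc_frames` and its four-frame cycle are hypothetical, and real panels use their own, often combined spatial and temporal, patterns.

```python
def frc_frames(value_10bit: int, frames: int = 4) -> list[int]:
    """Approximate a 10-bit level with a sequence of 8-bit levels (temporal dithering).

    Hypothetical sketch: a simple 4-frame cycle, not any manufacturer's real pattern.
    """
    if not 0 <= value_10bit <= 1023:
        raise ValueError("10-bit values must be in the range 0-1023")
    # Each 8-bit step spans four 10-bit steps: split into a base level and a remainder
    base, remainder = divmod(value_10bit, 4)
    sequence = []
    accumulator = 0
    for _ in range(frames):
        # Spread the remainder evenly: show the next level up whenever the
        # accumulated error reaches one full 8-bit step
        accumulator += remainder
        if accumulator >= 4:
            accumulator -= 4
            sequence.append(min(base + 1, 255))  # clamp at the 8-bit maximum
        else:
            sequence.append(base)
    return sequence


print(frc_frames(514))  # [128, 129, 128, 129]: averages to 128.5, i.e. 10-bit level 514
```

Over four frames the sum of the shown 8-bit levels equals the original 10-bit value, except at the very top of the range where the clamp applies. The brighter frames are interleaved rather than grouped together, which is meant to keep the flicker less noticeable; it is still an approximation, which is why such panels tend to be limited compared with true 10-bit ones.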

In that sense, games are no different from movies, streaming, photography or video editing. In all of these, source content keeps increasing in detail and quality, and the screen you use must keep up with it rather than get stuck in the past, or you will miss a lot of detail. That is why, today, 10 bits or more is what we should look for in a gaming monitor.