The battle between AMD and NVIDIA in the graphics card market has been going on for years, but AMD has not always made graphics cards. Its GPU division, today known as the Radeon Technologies Group, has its origins in a company called ATI Technologies. Join us on this journey through the story of AMD’s GPU division.

AMD’s GPU division was born in 1985 as Array Technology Incorporated. From the beginning the company specialized in graphics chips for PC graphics cards. That market was just emerging at the time, and its players either offered cheaper alternatives to IBM’s standards or proposed their own.

ATI Graphics Solution Rev 3

At the dawn of the PC you could use either monitors derived from televisions, which simply lacked the tuner needed to receive broadcasts, or higher-resolution monochrome screens. Offices around the world mostly used the second type, since it could show 80 characters per line of text, compared with the 40 characters typical of television-derived monitors.

Then an alternative to IBM’s option appeared: Hercules. It also offered 80 columns of text, with character cells 9 pixels wide, and added a monochrome graphics mode with a 720 × 348 pixel image buffer. However, it required its own dedicated Hercules graphics card, separate from IBM’s adapters.
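
The 720-pixel width follows directly from the text mode: 80 columns of 9-pixel-wide character cells. The short Python sketch below does that arithmetic and estimates how much memory a 1-bit-per-pixel monochrome screen needs. It assumes the commonly cited 720 × 348 Hercules graphics resolution, and the helper name `framebuffer_bytes` is purely illustrative.

```python
def framebuffer_bytes(width: int, height: int, bits_per_pixel: int) -> int:
    """Bytes needed to store one full screen at the given color depth."""
    total_bits = width * height * bits_per_pixel
    # Round up to whole bytes (8 bits per byte).
    return (total_bits + 7) // 8


# 80 text columns x 9-pixel-wide character cells give the screen width.
COLUMNS = 80
CELL_WIDTH = 9
width = COLUMNS * CELL_WIDTH  # 720

# Monochrome graphics: 1 bit per pixel, assuming a 720 x 348 mode.
hercules = framebuffer_bytes(width, 348, 1)
print(width, hercules)  # 720 31320
```

At 1 bit per pixel, a full graphics screen fits in under 32 KB, which is why a monochrome high-resolution mode was affordable at the time.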

The ATI Graphics Solution Rev 3 appeared on the market in 1985 and combined a CGA card and a Hercules card in a single unit. That versatility made it very popular, because users no longer needed different graphics cards in their PC depending on the type of monitor.

ATI Wonder graphics cards

ATI began to make a name for itself with the EGA Wonder. This EGA graphics card combined the Graphics Solution Rev 3 chipset with an EGA chip from Chips and Technologies, so a single card could handle several graphics standards without needing multiple adapters. That gave it broad compatibility at a time when PC graphics standards were a confusing mess.

Its successor was the ATI VGA Wonder, which used a VGA chipset developed by ATI itself while keeping compatibility with the earlier standards, again on a single card.

ATI Mach graphics cards

In 1990 ATI was still cloning IBM standards. The ATI Mach 8 was a clone of the IBM 8514/A graphics chip, the forerunner of the XGA standard. XGA allowed a screen resolution of 1024 × 768 pixels with a 256-color palette, or 640 × 480 with 16 bits per pixel (65,536 colors), and borrowed some of the graphics features of the Commodore Amiga’s graphics chips.
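
The framebuffer sizes show why these two modes pair naturally. The sketch below is a hypothetical Python helper, not taken from ATI or IBM documentation. It computes the memory each mode needs and checks it against an assumed 1 MB of video memory, which is an illustrative budget rather than a documented Mach 8 specification.

```python
ONE_MB = 1024 * 1024  # illustrative video memory budget (assumption)


def framebuffer_bytes(width: int, height: int, bits_per_pixel: int) -> int:
    """Bytes for one full screen; both modes here use whole-byte pixels."""
    return width * height * bits_per_pixel // 8


# (width, height, bits per pixel) for the two modes described above.
XGA_MODES = {
    "1024x768 @ 8 bpp": (1024, 768, 8),
    "640x480 @ 16 bpp": (640, 480, 16),
}

sizes = {}
for label, (w, h, bpp) in XGA_MODES.items():
    sizes[label] = framebuffer_bytes(w, h, bpp)
    print(f"{label}: {2 ** bpp:,} colors, {sizes[label]:,} bytes, "
          f"fits in 1 MB: {sizes[label] <= ONE_MB}")
```

Both modes need well under 1 MB (768 KB and 600 KB), so the designers could trade resolution for color depth within roughly the same amount of memory.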

One of the Amiga’s advantages over the PC was the so-called Blitter, a hardware unit that copied blocks of data from one part of memory to another and combined them on the fly with Boolean operations. This let the Amiga run powerful drawing applications despite having a less powerful processor.

What made this standard distinctive is that it was born as an extension running in parallel with VGA, aimed at professional monitors. Its biggest advantage was the addition of Blitter-style graphics operations like those of the Commodore Amiga. Operations such as drawing lines, copying blocks or filling a shape with color became possible without spending CPU cycles on them.
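
A blit that combines source and destination pixels through a Boolean operation can be modeled in a few lines of software. The Python sketch below is only a model. Names such as `blit`, `fill` and `ROP_XOR` are hypothetical and do not reflect ATI’s, IBM’s or Commodore’s actual register interfaces. XOR is a classic choice because repeating the same XOR blit restores the original image, which makes it useful for cursors and rubber-band selections.

```python
import operator
from typing import Callable, List

Bitmap = List[List[int]]  # rows of 8-bit pixel values
RasterOp = Callable[[int, int], int]


def rop_copy(src: int, dst: int) -> int:
    """Plain block copy: the destination pixel is replaced by the source pixel."""
    return src


ROP_XOR: RasterOp = operator.xor   # toggle pixels; a second identical blit undoes it
ROP_AND: RasterOp = operator.and_  # mask out pixels
ROP_OR: RasterOp = operator.or_    # overlay pixels


def blit(src: Bitmap, dst: Bitmap, sx: int, sy: int, dx: int, dy: int,
         w: int, h: int, rop: RasterOp = rop_copy) -> None:
    """Combine a w x h block of src at (sx, sy) into dst at (dx, dy), in place."""
    for row in range(h):
        for col in range(w):
            s = src[sy + row][sx + col]
            d = dst[dy + row][dx + col]
            dst[dy + row][dx + col] = rop(s, d) & 0xFF  # keep results 8-bit


def fill(dst: Bitmap, x: int, y: int, w: int, h: int, color: int) -> None:
    """Fill a rectangle with one color: a copy blit from a constant source."""
    blit([[color] * w for _ in range(h)], dst, 0, 0, x, y, w, h)


screen = [[0x0F] * 4 for _ in range(4)]
before = [row[:] for row in screen]
cursor = [[0xFF, 0xFF], [0xFF, 0xFF]]

blit(cursor, screen, 0, 0, 1, 1, 2, 2, ROP_XOR)  # draw the cursor
print(screen[1][1])                               # 240 (0xF0)
blit(cursor, screen, 0, 0, 1, 1, 2, 2, ROP_XOR)  # erase it again
print(screen == before)                           # True
```

In hardware, the same per-pixel loop runs in dedicated logic, and this is what freed the CPU in the 8514/A-style accelerators described above.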

Once the pre-VGA graphics standards had become completely obsolete, ATI released the ATI Mach 32, which included a VGA core and so unified the ATI Wonder and ATI Mach series into one. ATI still kept the Wonder name for certain products outside its main graphics card range, such as the video decoder cards used in that era to play video from CDs.

Starting with the ATI Mach64, the VGA chipset and the Mach accelerator were unified into a single graphics processor.

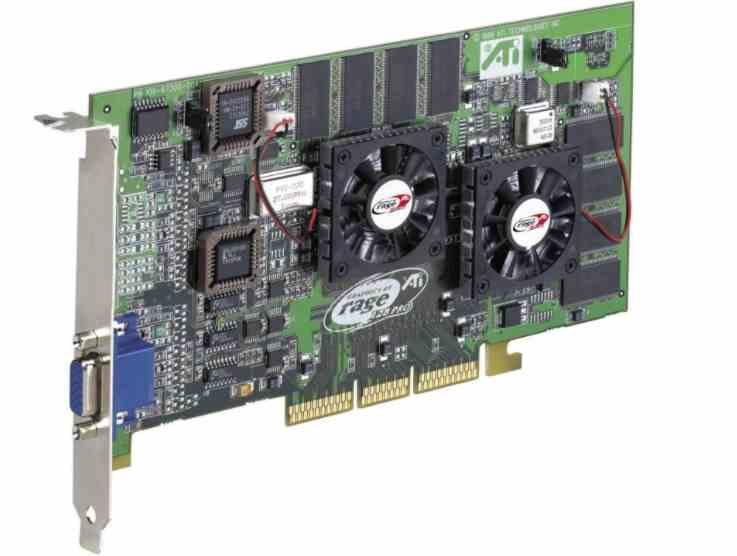

ATI RAGE graphics cards

The enormous success of 3dfx’s Voodoo Graphics and the arrival of games built on real-time 3D graphics forced companies like ATI to catch up or disappear from the graphics card market. ATI’s answer was the ATI Rage.

The problem was that once the Intel Pentium became standard in homes, CPUs rendered 3D game scenes much faster than most graphics cards of the time. Those cards had been designed to work alongside the 486, so they were being left behind.

ATI’s solution was to modify its Mach64 chipset with a series of changes that would make its graphics cards competitive in a future of real-time 3D gaming:

- Texture processing and filtering were added, together with a 4 KB texture cache.

- A triangle setup unit, which prepares triangles for rasterization, was added to relieve the CPU of that heavy load.

However, the first ATI Rage chips arrived late. Although they matched a Voodoo Graphics, they paled against the Voodoo2 and the Riva TNT. ATI’s response was to increase the number of texture units from 1 to 2 and move to a 128-bit bus, and it sold the result as the ATI Rage 128.

Another standout card in the Rage range was the Rage Fury MAXX, the first ATI graphics card to implement what would later become CrossFire technology. It placed two Rage 128 chips on a single card.
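
The Rage Fury MAXX is commonly described as splitting work between its two chips with alternate frame rendering (AFR), where each chip renders whole frames in turn. The Python sketch below only models that scheduling idea. The function name `assign_frames_afr` is hypothetical, and this is not ATI’s driver logic.

```python
from collections import defaultdict


def assign_frames_afr(num_frames: int, num_gpus: int = 2) -> dict[int, list[int]]:
    """Alternate frame rendering: frame n is rendered by GPU n mod num_gpus."""
    schedule: defaultdict[int, list[int]] = defaultdict(list)
    for frame in range(num_frames):
        schedule[frame % num_gpus].append(frame)
    return dict(schedule)


print(assign_frames_afr(6))  # {0: [0, 2, 4], 1: [1, 3, 5]}
```

Because each chip renders complete frames on its own, each one needs its own copy of the scene data in its own memory. That is the usual trade-off of this approach compared with splitting a single frame between chips.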

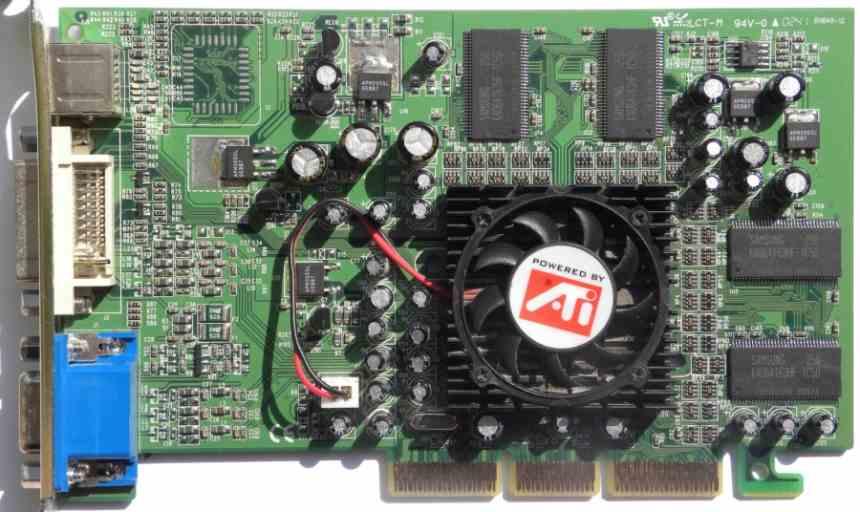

The first ATI Radeon graphics cards

ATI dropped the Rage brand starting with the first Radeon, the company’s first card with DirectX 7 support. It used the ATI R100 GPU, initially named Rage 7, which in reality was little more than a Rage 128 with an integrated T&L unit. Full DirectX 7 support required dedicated units that calculate the scene geometry when rendering 3D scenes in real time.
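
As a rough illustration of what “calculating the geometry” means, the Python sketch below performs the two kinds of per-vertex work that fixed-function T&L hardware took over from the CPU: transforming a vertex by a 4 × 4 matrix and computing a basic diffuse lighting term. It is a simplified software model with hypothetical helper names, not a description of how the R100 implemented it in silicon.

```python
from typing import Sequence

Vec3 = tuple[float, float, float]


def transform(m: Sequence[Sequence[float]], v: Vec3) -> tuple[float, ...]:
    """Multiply a 4x4 matrix by the homogeneous point (x, y, z, 1)."""
    p = (v[0], v[1], v[2], 1.0)
    return tuple(sum(m[r][c] * p[c] for c in range(4)) for r in range(4))


def diffuse(normal: Vec3, light_dir: Vec3) -> float:
    """Lambertian lighting term max(0, N . L); both vectors assumed unit length."""
    return max(0.0, sum(n * l for n, l in zip(normal, light_dir)))


# Translate by +2 along x, then light a surface facing the light head-on.
translate_x2 = [[1, 0, 0, 2],
                [0, 1, 0, 0],
                [0, 0, 1, 0],
                [0, 0, 0, 1]]
print(transform(translate_x2, (1.0, 1.0, 1.0)))   # (3.0, 1.0, 1.0, 1.0)
print(diffuse((0.0, 0.0, 1.0), (0.0, 0.0, 1.0)))  # 1.0
```

A 3D scene repeats this work for every vertex of every frame, which is why moving it into dedicated hardware mattered so much for performance.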

So at heart, the first Radeon was nothing more than a Rage 128 with fixed-function units for geometry calculation. It did not match the performance of the first- and second-generation GeForce cards of the time, but it was the turning point the company needed to make the necessary change.

What cleared the way for ATI to become NVIDIA’s rival for years was the demise of 3dfx, which NVIDIA went on to buy, and the decline of S3 and Matrox, whose graphics cards were not up to the task.

The purchase of ArtX by ATI

The ATI Technologies of the 2000s owes a great deal to its purchase of ArtX, a small company of former Silicon Graphics engineers. When ATI bought it, ArtX’s most recent work had been the graphics chip for the Nintendo GameCube console. Before that, its team had worked on the Nintendo 64, and some of its engineers had worked on the legendary Silicon Graphics RealityEngine.

The purchase mattered because ArtX’s people and know-how transformed ATI completely and allowed it to go head-to-head with NVIDIA. Paradoxically, although ATI Technologies never designed a GPU for a Nintendo console, the ArtX purchase put its logo on the Nintendo consoles that used ArtX technology: the GameCube and the Wii.

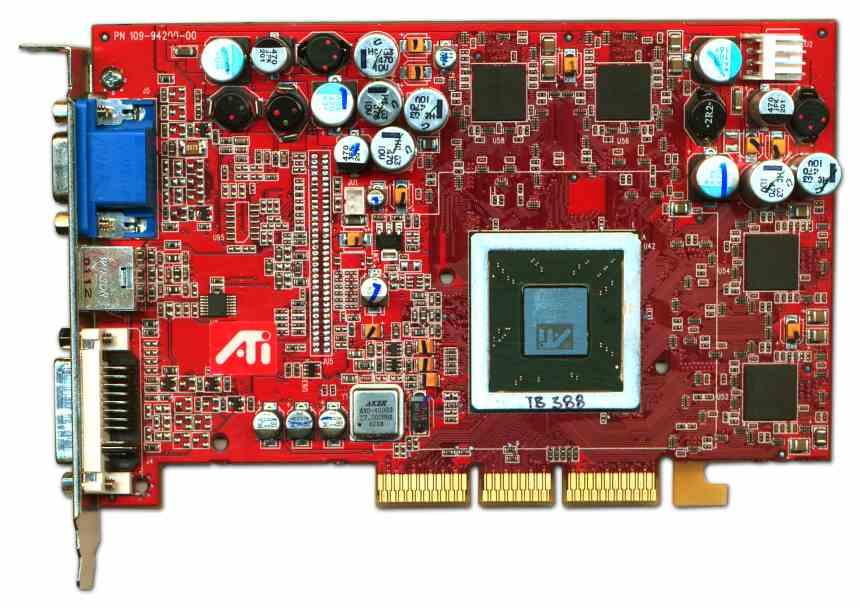

Radeon 9700, the beginning of the golden age

The ATI Radeon 9700, based on the R300 chip, became one of the most important graphics cards in ATI’s history, if not the most important of all. In terms of impact, it is comparable to the first NVIDIA GeForce.

To be competitive, ATI had bought the startup ArtX, founded by the former Silicon Graphics engineers who had worked on the 3D technology of the Nintendo 64 and Nintendo GameCube consoles. The result of the purchase was the R300 GPU, which put ATI at the forefront.

ATI also benefited from a perfect storm, since the launch of the Radeon 9700 coincided with the biggest stumble in NVIDIA’s history, the GeForce FX. The legend of the ATI Radeon had begun, and with it the rivalry between NVIDIA and ATI.

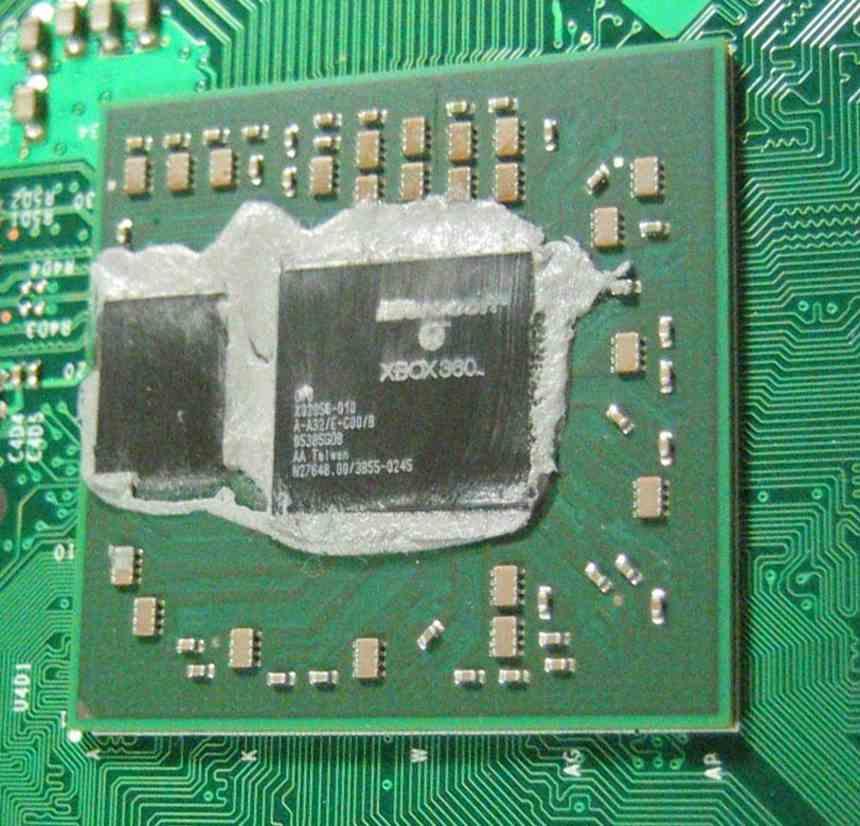

Xenos, ATI’s first console GPU

Microsoft originally planned to release what would become Windows Vista in 2003 or 2004, under the codename Longhorn, together with a new version of DirectX. This was not the DirectX 10 that was finally released for PC, but a more limited version that never appeared. ATI Technologies designed a GPU for it, the first to use the same units to run every type of shader.

When Longhorn, as Vista was codenamed at the time, failed to come out, ATI and Microsoft decided to repurpose that design as the ATI Xenos GPU of the Xbox 360. It was ATI’s first design for a video game console, and it started a collaboration between Microsoft and ATI, now part of AMD, that continues today with the Xbox Series consoles. It also led to a later collaboration with Sony on the PlayStation. For all these reasons, Xenos is one of the most important graphics chips in ATI’s history.

ATI Radeon HD 2000

ATI’s first PC graphics card with unified shaders arrived later than NVIDIA’s GeForce 8800, even though ATI had been the first company to implement unified shaders, in the Xbox 360 GPU.

However, the two architectures were completely different, and the Microsoft console’s GPU cannot be compared with the R600. In its most powerful version, the Radeon HD 2900, the R600 had 320 ALUs. Under the TeraScale architecture that means 64 VLIW5 units, grouped into 4 SIMD arrays, roughly what AMD would later call Compute Units.
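
The relationship between these ALU counts is simple arithmetic. The Python sketch below maps an ALU count to VLIW5 units and SIMD arrays. It assumes the commonly documented TeraScale grouping of 5 ALU lanes per VLIW5 unit and 16 VLIW5 units per SIMD array, and the constants and function name are illustrative.

```python
LANES_PER_VLIW5 = 5   # ALU lanes bundled in one VLIW5 unit
UNITS_PER_SIMD = 16   # VLIW5 units per SIMD array (assumed TeraScale grouping)


def terascale_layout(alus: int) -> tuple[int, int]:
    """Map an ALU ("stream processor") count to (VLIW5 units, SIMD arrays)."""
    per_simd = LANES_PER_VLIW5 * UNITS_PER_SIMD  # 80 ALUs per SIMD array
    if alus % per_simd:
        raise ValueError(f"{alus} ALUs do not form whole SIMD arrays")
    vliw_units = alus // LANES_PER_VLIW5
    return vliw_units, vliw_units // UNITS_PER_SIMD


print(terascale_layout(320))  # (64, 4): R600, Radeon HD 2900
print(terascale_layout(800))  # (160, 10): R700 generation, Radeon HD 4870
```

Under the same assumptions, the later jump from 320 to 800 ALUs corresponds to going from 4 to 10 SIMD arrays.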

The ATI R600 GPUs behind the Radeon HD 2000 series were a disappointment, because they could not compete head-to-head with the NVIDIA GeForce 8800 GTX. A year later, ATI launched the Radeon HD 3000 series on the 55 nm node, fixing some design flaws of the R600 architecture, especially its internal ring bus.

ATI Radeon HD 4870

After the disappointment of the R600 variants, AMD decided to scale the architecture from 320 ALUs to 800, creating the R700 architecture behind the ATI Radeon HD 4000 series. The ATI Radeon HD 4870 became the star of that lineup, and ATI was once again fighting for the performance crown in direct competition with NVIDIA.

ATI Radeon HD 5000

Instead of designing a completely new architecture from scratch for DirectX 11, ATI decided to release the R800 chip, which was essentially an R700 updated for DirectX 11. In reality it was not truly optimized for DirectX 11: ATI released TeraScale 2 as a stopgap, with very few real changes.

One of the novelties of DirectX 11 was compute shaders, but they were initially handled by merging the on-screen drawing command list and compute work into a single one. This turned out to be a huge disaster for both ATI and NVIDIA. At the time, however, ATI was focused on making possible the plan of AMD, which it was already part of, to unite CPU and GPU on a single chip, so all its efforts went into the AMD Fusion project.

The Radeon HD 5000 series marked the end of the ATI brand on graphics cards. From then on the cards were sold under the AMD brand, and AMD’s graphics division later became the Radeon Technologies Group, or RTG, which continues to this day.