NVIDIA Ampere graphics cards were the first to incorporate GDDR6X graphics memory, the next step up in performance from GDDR6. With this memory, they match the 21 Gbps of bandwidth that was available at the time, but they achieve it in an innovative way that brings multiple advantages … and some disadvantages. Was the change worth it? Let's find out.

GDDR6X graphics memory is not a JEDEC standard but a design exclusive to Micron Technology, a new memory technology intended to raise performance to new heights. We are not going to assess the pros and cons of one manufacturer holding exclusive rights to the technology, but we are going to see whether the change from GDDR6 has been worth it.

GDDR6X vs GDDR6, how are they different?

At this point, no one should doubt that GDDR6X represents a leap in many respects over GDDR6 graphics memory (which is still popular in most gaming laptops under 1500): in terms of performance, GDDR6X is superior, and this is the reason NVIDIA took the risk of introducing it in its Ampere architecture graphics cards, no more, no less.

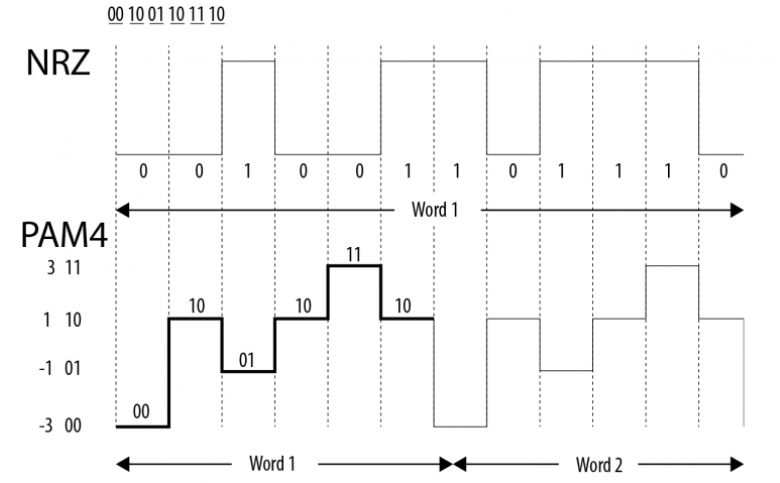

To achieve this increase in performance, Micron has used a technology that was already common in networking hardware: PAM4 encoding, as opposed to the NRZ encoding of GDDR6. PAM stands for Pulse Amplitude Modulation, and it represents multiple values using the amplitude of the electrical signal.

Thus, while NRZ encoding uses only two signal levels (a one or a zero), PAM4 uses four, so it encodes 2 bits per cycle compared to only 1 with NRZ, doubling the bandwidth at the same signaling rate.
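To make the difference concrete, here is a minimal Python sketch of the two encodings. The level numbering (0 to 3) and the plain binary mapping of bit pairs to levels are assumptions chosen for illustration, not Micron's actual signaling scheme; real links may map bits to levels differently.

```python
# Illustrative only: levels are abstract indices, not real GDDR6X voltages.

def encode_nrz(bits):
    """NRZ: each bit becomes one symbol with two possible levels (0 or 1)."""
    return list(bits)

def encode_pam4(bits):
    """PAM4: each pair of bits becomes one symbol with four possible levels (0-3)."""
    if len(bits) % 2:
        raise ValueError("PAM4 needs an even number of bits")
    # Assumed mapping: the first bit of the pair is the most significant.
    return [2 * bits[i] + bits[i + 1] for i in range(0, len(bits), 2)]

bits = [1, 0, 0, 1, 1, 1, 0, 0]
nrz_symbols = encode_nrz(bits)    # 8 symbols for 8 bits
pam4_symbols = encode_pam4(bits)  # 4 symbols for the same 8 bits
print(len(nrz_symbols), len(pam4_symbols))
```

The same eight bits need eight symbols with NRZ but only four with PAM4, which is where the doubling comes from.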

Although PAM4 encoding lets GDDR6X double the bandwidth of GDDR6, for now its bandwidth has been left at the same 21 Gbps that GDDR6 already reached by running at a high frequency. Why do this? Because it delivers the same bandwidth at a lower speed, which translates into many advantages:

- Less heat generated.

- Less consumption.

- Less voltage.

- Greater margin for overclocking.

- They could potentially double performance.
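The trade-off behind this list can be checked with quick arithmetic: data rate equals symbol rate times bits per symbol. The sketch below uses the article's 21 Gbps figure; the function names are hypothetical and the numbers are illustrative, not measured specifications.

```python
import math

def bits_per_symbol(levels):
    """Bits carried by one symbol that can take `levels` distinct amplitudes."""
    return math.log2(levels)

def required_symbol_rate(data_rate_gbps, levels):
    """Symbol rate (in billions of symbols per second) needed for a given data rate."""
    return data_rate_gbps / bits_per_symbol(levels)

def data_rate(symbol_rate, levels):
    """Data rate in Gbps obtained from a given symbol rate."""
    return symbol_rate * bits_per_symbol(levels)

target = 21.0  # Gbps, the figure quoted in the article

nrz_rate = required_symbol_rate(target, levels=2)   # 21.0
pam4_rate = required_symbol_rate(target, levels=4)  # 10.5
print(nrz_rate, pam4_rate)

# Running PAM4 at the NRZ symbol rate would double the data rate.
print(data_rate(nrz_rate, levels=4))  # 42.0
```

In other words, PAM4 reaches the same figure with half the signaling rate, and the headroom that remains is what the last bullet refers to.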

Micron boasts that it has reimagined memory with this new technology, and NVIDIA has bet heavily on it. The potential is certainly enormous, putting memory performance levels never seen before within reach.

Is it worth the change?

Now, using this type of graphics memory also has a series of technical disadvantages, in addition to the one already mentioned: Micron manufactures it exclusively and therefore controls its price and production (which makes GDDR6X more expensive and scarcer than GDDR6).

As explained above, PAM4 encoding uses four signal levels per clock cycle, which allows it to encode 2 bits per cycle versus 1 with NRZ encoding. This makes the voltage controller much more important: a lapse in its precision could cause read/write errors in the VRAM, leading to artifacts, hangs, blue screens, etc.

This is not to say that GDDR6X is going to have memory errors, far from it; it is simply more sensitive and prone to them, and much more dependent on the controller. The good part is that, since it works at a lower voltage and generates less heat, this disadvantage is partially offset.
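The sensitivity argument can be illustrated with a rough sketch. It assumes a normalized voltage swing of 1.0 with evenly spaced levels, which is a simplification and not GDDR6X's real electrical specification: fitting four levels into the same swing leaves one third of the spacing between adjacent levels, so a voltage error that NRZ absorbs can flip a PAM4 symbol.

```python
def decision_margin(levels, swing=1.0):
    """Largest voltage error a symbol tolerates: half the gap between adjacent levels."""
    return swing / (levels - 1) / 2

def decode(voltage, levels, swing=1.0):
    """Return the index of the nominal level closest to the received voltage."""
    step = swing / (levels - 1)
    index = round(voltage / step)
    return max(0, min(levels - 1, index))  # clamp to the valid level range

error = 0.2  # hypothetical voltage error added to a symbol sent at level 0

print(decision_margin(2))  # 0.5 of the swing for NRZ
print(decision_margin(4))  # about 0.167 of the swing for PAM4

print(decode(0.0 + error, levels=2))  # still read as level 0
print(decode(0.0 + error, levels=4))  # misread as level 1
```

The same disturbance is harmless with two levels and produces a wrong symbol with four, which is why the precision of the voltage controller matters so much more here.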

As for whether the switch to this new memory technology has been worth it, the answer is of course yes. Although it is not yet exploiting its full performance potential, it could double the memory bandwidth, and for now it has already considerably reduced consumption and heat, perks that are well worth it on their own.

Now, what the future holds in the VRAM arena remains to be seen. NVIDIA has a lot of potential to exploit with this type of memory, but we are still waiting for AMD's “answer” in this regard, as it has also made its promises. NVIDIA has been a pioneer in using GDDR6X, but we cannot forget that AMD was the forerunner with HBM memory, which later turned out to be quite a fiasco.